Preface

The long-awaited release of Apache Spark 4.0 brings a set of enhancements, extending its features and enriching the developer's experience with this unified analytics engine. This presentation aims to highlight the notable changes brought about by Spark 4.0.

- Preface

- Introduction

- Enhancement of ANSI Mode and Data Types in Apache Spark 4.0

- Enhancing New Features in Apache Spark 4.0: String Collation and Streaming

- XML Support and User-Defined Functions in Apache Spark 4.0

- Enhancements in Profiling, Logging, and PySpark in Apache Spark 4.0

- About the special site during DAIS

Introduction

Every new Spark release brings a multitude of improvements, new features, and bug fixes that make Spark a formidable tool for data analytics. In this discussion, we dive into these features and enhancements, covering topics such as SQL, Python, and Streaming, as well as the components that make Spark more user-friendly. The presentation is divided into two segments.

The first segment focuses on the various innovative features and changes incorporated into Apache Spark 4.0. The second segment delves deeper into Spark extensions and custom functions, investigating the uniqueness of Spark.

A distinguishing aspect of the Spark 4.0 release is that it is based on G8. We will gradually unravel the complexities of this release, focusing mainly on the new features and improvements it introduces. We then look in more detail at improving Spark's extensibility and custom functions, and at evaluating the level of Spark's autonomy.

In this introduction and agenda, we embark on a journey to understand the unique elements and significant improvements of Apache Spark 4.0. The forthcoming discussions will shed additional light on the progress of this unified analytics engine.

Enhancement of ANSI Mode and Data Types in Apache Spark 4.0

With the release of Apache Spark 4.0 approaching, preparations for interactive application deployment through integrated development environments (IDEs) have progressed significantly. This version emphasizes broad language support, including Go, Rust, and Scala 3, all made possible by Spark Connect reaching GA in Apache Spark 4.0.

Another significant feature of Apache Spark 4.0 is Spark Connect's thin client mode. It provides a compact library that can be used just by installing spark-connect. The new library is written purely in Python and does not need a local Java Virtual Machine (JVM), unlike the regular PySpark package. It leaves out the server-side components and occupies only about 1.5 megabytes, a significant reduction from the previous package, which required over 300 megabytes of storage.

With these changes, developers are strongly encouraged to migrate their applications entirely to Spark Connect. As a result, application deployment becomes significantly simpler.
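As a rough sketch of what working with the thin client can look like, assuming the client described above is the Spark Connect Python client and that a Spark Connect server is already running somewhere reachable. The helper names `connect_url` and `open_session` are hypothetical and exist only for this illustration; the `sc://host:port` connection string and `SparkSession.builder.remote` are the documented Spark Connect entry points, and 15002 is the default server port.

```python
def connect_url(host: str, port: int = 15002) -> str:
    """Build a Spark Connect connection string of the form sc://host:port."""
    return f"sc://{host}:{port}"


def open_session(url: str):
    """Open a remote Spark session.

    Assumes the Python client package is installed and that a Spark Connect
    server is listening at `url`. No local JVM is started by this call.
    """
    # Imported lazily so this sketch can be loaded without Spark installed.
    from pyspark.sql import SparkSession

    return SparkSession.builder.remote(url).getOrCreate()
```

With a server running locally, `open_session(connect_url("localhost"))` would return a session on which the usual DataFrame API can be used, while the actual execution happens on the server.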

These enhancements and the enriched developer's experience delivered by Apache Spark 4.0 amplify the flexibility of data analytics and generate new possibilities.

Enhancing New Features in Apache Spark 4.0: String Collation and Streaming

The upcoming Apache Spark 4.0 introduces considerable improvements that streamline the unified analytics engine and enhance the developer experience. In this section, we take a closer look at two of them: string collation and streaming.

String Collation: Enhanced Control over Sensitivity, Scope, and Locality

Apache Spark 4.0 introduces a new string collation feature. It applies to all string functions and allows fine-tuning of sensitivity, scope, and locality, which were previously difficult to control.

This feature affects all string operations within Spark (lower case, upper case, substring, locate, etc.) and also applies to groupBy, orderBy, and join keys. It extends to Delta Lake and Photon as well.

Practical Use Case of String Collation

Consider a scenario where you need to sort string values in a table. Older Spark versions sorted by ASCII code by default, which places every capital letter ahead of every lowercase letter. Also, certain variables (macros) are processed within the range.

This ordering can seem unintuitive. For instance, it might seem odd that capital 'B' is placed ahead of lowercase 'a', which can create confusion in real-world scenarios.
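The ordering problem is easy to reproduce outside Spark. The following is a plain Python illustration, not Spark code: `sorted` compares code points by default, which matches the ASCII-style ordering described above, and `str.casefold` is used here as a stand-in for a case-insensitive collation. The sample names are made up for the example. In Spark 4.0 itself, the same choice is expressed by picking a collation, for example a binary one versus a case-insensitive one.

```python
names = ["banana", "Apple", "cherry", "Banana", "apple"]

# Code-point ordering: every uppercase letter sorts before every lowercase one,
# so "Banana" lands ahead of "apple".
binary_order = sorted(names)

# Case-insensitive ordering, approximated with str.casefold as the sort key.
# sorted() is stable, so names that compare equal keep their input order.
case_insensitive_order = sorted(names, key=str.casefold)

print(binary_order)            # ['Apple', 'Banana', 'apple', 'banana', 'cherry']
print(case_insensitive_order)  # ['Apple', 'apple', 'banana', 'Banana', 'cherry']
```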

Fortunately, Apache Spark 4.0 resolves these issues. With the enhanced string collation and improved streaming functionality, developers can analyze data more accurately and efficiently. These new features look promising, and we encourage you to keep an eye on them.

XML Support and User-Defined Functions in Apache Spark 4.0

The highly anticipated release of Apache Spark 4.0 is more than just an increment in the numbering system. It brings along significant enhancements that refine its functionality and improve the developer's experience with the unified analytics engine. The latest updates - evolved XML support and secured user-defined functions - caught my interest and appear promising to all Spark users.

toArrow and applyInArrow

Let's discuss toArrow and applyInArrow, both newly added to PySpark. toArrow is a hot topic among developers: it is an API added to PySpark that makes it easier to convert a PySpark DataFrame into a PyArrow table. Though this feature is robust, developers need to keep in mind that the resulting Arrow table lives in the driver's memory, so the memory load grows with the size of the table.

Spark 4.0 addresses this memory concern with applyInArrow. This functionality produces the Arrow tables on the worker side and passes them to Python functions for computation there, thereby improving scalability.
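A minimal sketch of the difference, assuming the two features discussed above correspond to PySpark's `DataFrame.toArrow` and `GroupedData.applyInArrow`. The function names `center_values` and `arrow_examples`, the column names `id` and `v`, and the schema string are all hypothetical choices for this illustration.

```python
def center_values(table):
    """Per-group function for applyInArrow: takes a pyarrow.Table, returns a pyarrow.Table."""
    # Imported lazily so the sketch can be loaded without pyarrow installed.
    import pyarrow.compute as pc

    v = table.column("v")
    centered = pc.subtract(v, pc.mean(v))  # subtract the group mean from each value
    return table.set_column(table.schema.get_field_index("v"), "v", centered)


def arrow_examples(df):
    """`df` is assumed to be a PySpark DataFrame with columns id (long) and v (double)."""
    # Collects the whole DataFrame into a single pyarrow.Table on the driver,
    # so the driver needs enough memory to hold all of it.
    collected = df.toArrow()

    # Runs center_values once per group on the workers; the result is still a
    # distributed DataFrame, so nothing has to fit on the driver.
    per_group = df.groupBy("id").applyInArrow(center_values, schema="id long, v double")
    return collected, per_group
```

The design point is where the Arrow data ends up: the first call pulls everything to one place, while the second keeps the Arrow tables next to the data they were built from.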

XML Connector

Next is the brand new XML connector. A large number of users still rely on XML, and Spark 4.0 introduces an XML connector to streamline their workflows.
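Reading XML might look roughly like the sketch below. It assumes the built-in connector is exposed under the `xml` format name with a `rowTag` option that names the element to treat as one row, as in the earlier standalone spark-xml package. The helper name `read_xml`, the file name, and the tag name are hypothetical.

```python
def read_xml(spark, path: str, row_tag: str):
    """Load an XML file into a DataFrame, one row per <row_tag> element.

    `spark` is assumed to be an existing SparkSession.
    """
    return (
        spark.read.format("xml")
        .option("rowTag", row_tag)  # element that marks the start of each row
        .load(path)
    )
```

For a file of `<book>` elements, a call such as `read_xml(spark, "books.xml", "book")` would return a DataFrame whose columns are inferred from the child elements and attributes of each `<book>`.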

What we have explored here is just the tip of the iceberg among the numerous new features and updates. For a comprehensive list, see the official release notes.

We are all eagerly waiting for the release of Apache Spark 4.0, which is packed with upgrades. Digging deeper into these new features and advancing our journey in data analytics will be a thrilling experience. The exploration of the possibilities brought by this update does not end here, so look forward to more features and improvements in the following sections.

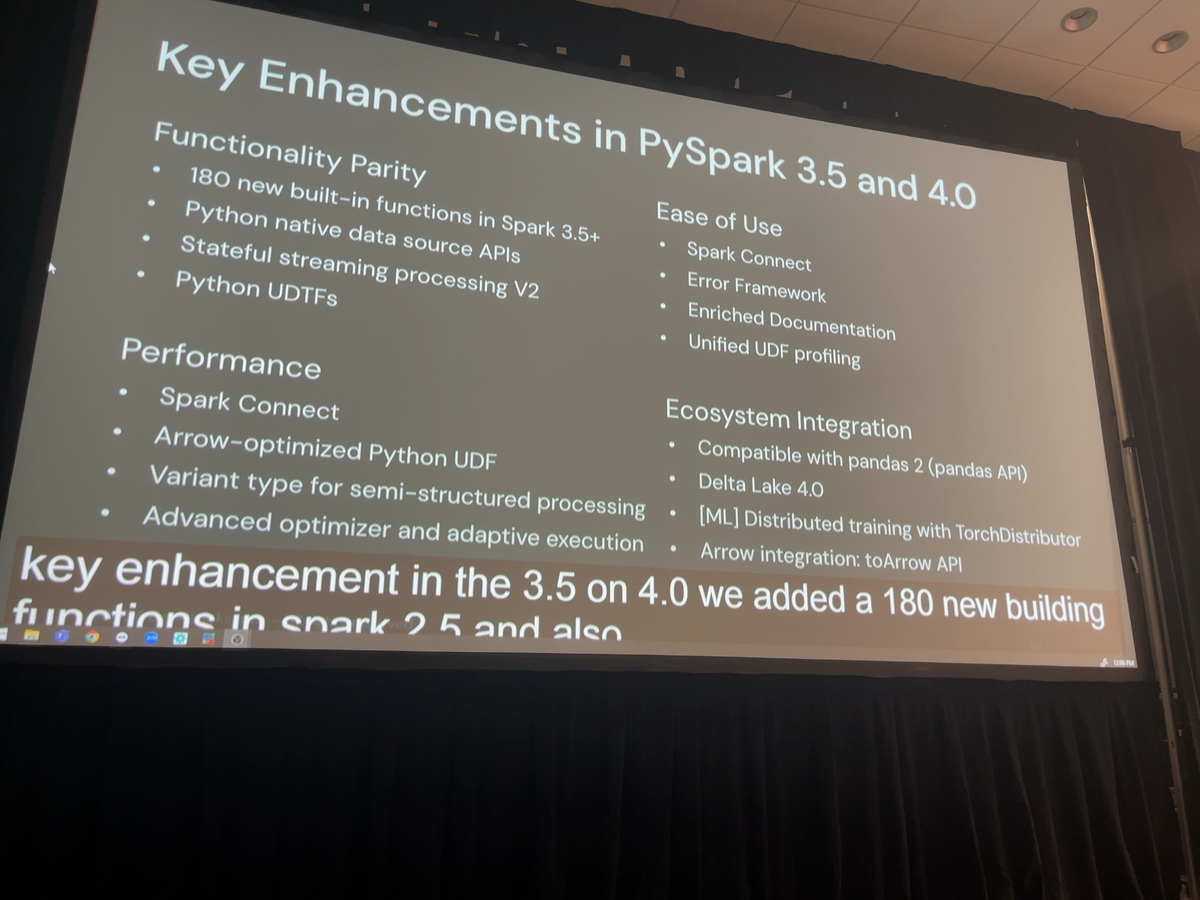

Enhancements in Profiling, Logging, and PySpark in Apache Spark 4.0

The much-anticipated release of Apache Spark 4.0 includes numerous improvements. In this article, we'll take a closer look at the enhancements made to profiling, logging, and PySpark.

First, the profiling framework has evolved significantly in this version. Unified profiling is extremely useful when a detailed analysis of code behaviour is needed. Developers can configure Spark for performance profiling with perf and for memory profiling with memory. Once the run completes, use show to display the results. If necessary, the results can be saved with dump, and everything can be reset with clear.

As a simple example, consider applying a pandas UDF (user-defined function) that adds one to each element. The same approach works for all user-defined functions. With the settings above, Spark provides deep insight into how the function behaves. The performance profiler is particularly useful for understanding a function's execution time and bottlenecks.
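The workflow above can be sketched as follows. This is a sketch under assumptions rather than a definitive recipe: it assumes the profiler is selected through the `spark.sql.pyspark.udf.profiler` setting and driven through `spark.profile`, and that pandas and PyArrow are installed alongside PySpark. The helper names `profiler_setting` and `profile_add_one` and the `dump_dir` parameter are hypothetical.

```python
PROFILER_CONF = "spark.sql.pyspark.udf.profiler"


def profiler_setting(kind: str) -> dict:
    """Return the configuration entry that selects a profiler ("perf" or "memory")."""
    if kind not in ("perf", "memory"):
        raise ValueError(f"unknown profiler kind: {kind!r}")
    return {PROFILER_CONF: kind}


def profile_add_one(spark, kind: str = "perf", dump_dir=None):
    """Profile a pandas UDF that adds one to each element.

    `spark` is assumed to be an existing SparkSession.
    """
    # Imported lazily so the sketch can be loaded without Spark installed.
    import pandas as pd
    from pyspark.sql.functions import pandas_udf

    for key, value in profiler_setting(kind).items():
        spark.conf.set(key, value)

    @pandas_udf("long")
    def add_one(values: pd.Series) -> pd.Series:
        return values + 1

    # Run the UDF so that there is something to profile.
    spark.range(10).select(add_one("id")).collect()

    spark.profile.show(type=kind)                # display the collected results
    if dump_dir is not None:
        spark.profile.dump(dump_dir, type=kind)  # optionally save them
    spark.profile.clear()                        # reset everything
```

Passing `kind="memory"` switches the same run to memory profiling, which may need an additional profiling package to be installed in the Python environment.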

Next is memory profiling. The memory profiler reports each function's memory usage, and the results can be used for optimization. For instance, when a problem inside a user-defined function causes a memory shortage, this feature can help identify it.

Finally, Spark 4.0 enhances its logging feature. The transition to a more structured logging framework improves developers' logging experience. This allows intuitive and efficient analysis of log information and enables accurate extraction of necessary information.
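To illustrate why structured logs are easier to analyze, here is a small plain Python sketch that filters JSON-lines records by level. The sample records and the field names (`ts`, `level`, `msg`, `context`) are hypothetical and written only for this example; they are not real Spark output, and the actual layout should be taken from the Spark documentation.

```python
import json

# Hypothetical JSON-lines records written for this sketch; not real Spark output.
SAMPLE_LOG = """\
{"ts": "2024-06-12T10:00:00Z", "level": "INFO", "msg": "job started", "context": {"job_id": "1"}}
{"ts": "2024-06-12T10:00:05Z", "level": "ERROR", "msg": "task failed", "context": {"job_id": "1", "task_id": "7"}}
{"ts": "2024-06-12T10:00:09Z", "level": "INFO", "msg": "job finished", "context": {"job_id": "1"}}
"""


def records_at_level(text: str, level: str) -> list:
    """Parse JSON-lines text and keep only the records with the given level."""
    records = [json.loads(line) for line in text.splitlines() if line.strip()]
    return [record for record in records if record.get("level") == level]


errors = records_at_level(SAMPLE_LOG, "ERROR")
for record in errors:
    # Fields are addressed by name instead of being cut out of free text.
    print(record["ts"], record["msg"], record["context"])
```

With unstructured text logs, the same question would need a regular expression per message format; with structured records it is a field lookup.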

In conclusion, improvements in profiling, logging, and PySpark in Apache Spark 4.0 have paved the way for a better data analytics experience. These enhancements in Apache Spark allow developers to analyze data more efficiently and effectively, which is why all of us eagerly await its evolution.

In today's session, we were able to deepen our understanding of the new features and their effects in Apache Spark 4.0. We look forward to the future releases of Apache Spark, evolving data analytics even further and making our tasks smoother.

About the special site during DAIS

This year, we have prepared a special site to report on the session contents and the situation from the DAIS site! We plan to update the blog every day during DAIS, so please take a look.