Preface

Welcome to the world of MLOps. In this session, we kick off the discussion with insights and challenges faced while implementing an effective MLOps framework, joined by product owners Lavinia Guadaniolo and Alessandro from Plenitude.

The necessity of MLOps is underscored by the significant barriers many organizations face when establishing a unified framework. This supports the development and deployment of efficient and sustainable machine learning models. These challenges often lead to issues related to model reproducibility, scalability, and management.

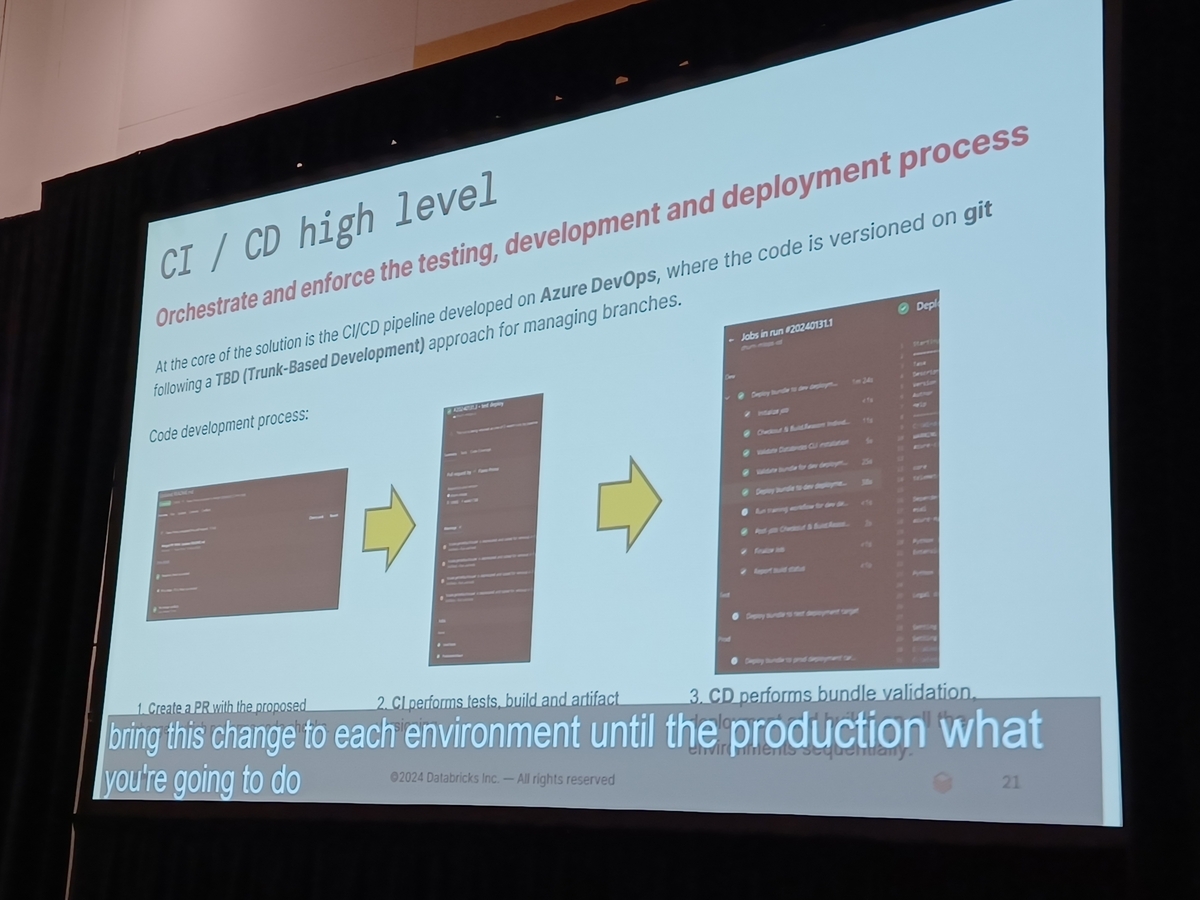

To address these barriers, the session highlights two crucial tools essential for building an MLOps framework. We delve deeply into the pivotal role continuous integration/continuous delivery (CI/CD) processes play in facilitating seamless operations of machine learning models across various environments. The discussion concludes with a practical demonstration, showing how these tools and processes are integrated to enhance the capabilities of MLOps.

- Preface

- Efficient MLOps: Pipeline Processes and Validation

- Q&A and Future Directions

- About the special site during DAIS

This segment provides a detailed examination of the early stages and challenges of adopting MLOps, setting the stage for an in-depth discussion on specific tools and CI/CD processes used within the MLOps framework. This introduction serves as a foundation for understanding the comprehensive approach necessary to efficiently navigate the complexities of MLOps.

Unity Catalog and Databricks Tools

Unity Catalog is foundational to the Databricks ecosystem, streamlining data management capabilities within the MLOps framework. It enables seamless operations across various environments suitable for specific stages of the ML model development lifecycle:

Sandbox Environment: This initial environment serves as a playground for multidisciplinary teams comprising data analysts, data scientists, data engineers, and MLOps engineers. Primarily designed for exploratory work, it allows teams to freely experiment and incubate new ideas without impacting other environments.

Staging Environment: At the staging stage, the codebase undergoes rigorous testing to ensure its effectiveness and reliability before deployment to production. This environment acts as a crucial checkpoint to verify whether the proposed solutions are robust and scalable enough to handle real production demands.

Production Environment: The production stage is where thoroughly tested applications and models are deployed. This environment reflects the operational standards and business objectives of the organization, focusing on maintaining the highest levels of performance and stability.

Unity Catalog significantly contributes to the governance and security of data flow through these transitional stages, ensuring consistent adherence to access management, data handling policies, and compliance measures. Integration into the MLOps framework simplifies managing complex data landscapes and enhances the overall efficiency of ML model deployments.

In the realm of machine learning (ML), the implementation of continuous integration and continuous delivery (CI/CD) pipelines using Databricks is positioned as a cornerstone for efficiently managing the model lifecycle from development to deployment. This section discusses the strategic setup of various environments (development, staging, operational) and outlines a clear path from model conception to operational deployment.

Starting with the essential configuration file in Databricks, databricks.yaml, encapsulates job definitions and bundle names, specifying targeted workspaces for each pipeline stage (development, staging, operation). This configuration is crucial for ensuring smooth transitions and consistency across different stages of model development and deployment.

Next, we focus on managing model workflows through workflow asset.yaml. This file becomes pivotal in defining and scheduling jobs in Databricks. It includes orchestrating notebook executions and coordinating scheduled tasks, particularly addressing the needs of scalable ML projects.

Specific task settings are then highlighted, analyzing data preparation tasks—target creation and master creation in detail. These tasks are explicitly described in the file, specifying notebook paths and related parameters for execution. Once these initial tasks are completed, model training begins. Task dependencies are clearly set following the successful execution of target creation and master creation, enhancing the sequential integrity of the pipeline.

The live demonstration further clarifies how these predefined settings are operationalized within the Databricks environment, showcasing the fluid workflow transition from batch data processing to the inference stage. Observing these operations allows for valuable insights into the procedural dynamics and operational efficiency of ML model deployment using Databricks.

In conclusion, the adept implementation of CI/CD pipelines in Databricks not only enhances the efficiency of the model development cycle but also improves systemic stability across different setups, ensuring consistent and robust deployment of ML models in diverse environments. Through this strategic approach, enterprises achieve streamlined operations aligned better with business objectives, ultimately advancing the capabilities of machine learning and artificial intelligence.

Efficient MLOps: Pipeline Processes and Validation

Pipeline processes are fundamental aspects of MLOps. These processes, involving data collection, preprocessing, modeling, training, testing, and deployment, need to work seamlessly together and be managed efficiently.

Validation plays a crucial role in ensuring model performance. This session provided a detailed discussion on different types of validation techniques and their ideal implementation timelines.

As a practical implementation, the automation of pipeline construction and validation processes in the Databricks environment was demonstrated. This setup is essential for quickly adapting to real-time data changes and maintaining model accuracy and effectiveness.

Efficient management of these pipeline processes is critical to the success of machine learning projects. The session emphasized the importance of efficient pipelines and rigorous validation in achieving reliable machine learning operations.

Q&A and Future Directions

In this session, we explored efficient development and deployment of MLOps using Databricks. Moving forward, we will answer questions from participants and delve into the future prospects of MLOps.

Question 1: How does Databricks handle critical real-time model updates?

A participant inquired about the capability for real-time model updates. The speaker explained, "Databricks supports stream processing, enabling continuous data collection and model updates. This approach ensures model accuracy by reflecting the most current data."

Question 2: Can you elaborate more on the security measures in Databricks for MLOps?

Discussion also touched on security measures within MLOps. The speaker emphasized, "Databricks prioritizes security, incorporating comprehensive measures including data encryption, stringent access control, and robust monitoring systems. These measures collectively create a secure lifecycle for model development and deployment."

Future Directions in MLOps

The speaker discussed ongoing advancements in automation and scalability within MLOps. They pointed out emerging technologies that allow the integration of diverse data sources and centralized management of multiple models. Additionally, the importance of advancing industry-wide ethical guidelines in AI operations was highlighted, ensuring responsible development and application.

This session showcased the effectiveness of using a Databricks-based MLOps framework and introduced innovative approaches that could significantly drive AI adoption across the industry. The outlined technological advancements raise substantial expectations for future developments in the MLOps landscape.

About the special site during DAIS

This year, we have prepared a special site to report on the session contents and the situation from the DAIS site! We plan to update the blog every day during DAIS, so please take a look.