Introduction

This is May from the GLB Division Lakehouse Department.

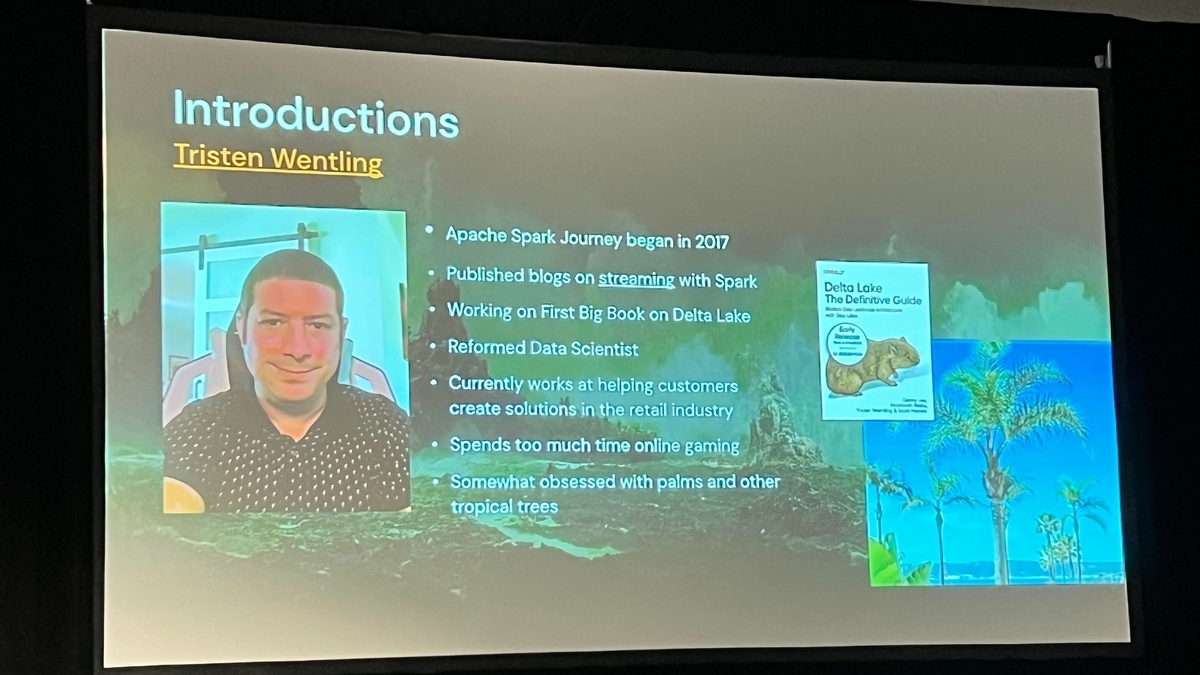

I will share "The Hitchhiker's Guide to Delta Lake Streaming" on improving the data collection process, as reported by members attending the Data + AI SUMMIT2023 (DAIS) in the field. The session was led by Scott Haines, Senior Software Engineer, Spark Delta OSS Contributor, Delta OSS Contributor and Tristen Wentling, Senior Social Architect, Delta OSS Contributor.

The theme and purpose of this talk is to explain the importance of avoiding bad processes in the data collection process, setting clear boundaries at both ends of the data collection process, and show how Delta Lake Streaming can be leveraged. The intended target audience is engineers interested in data & AI, data engineers interested in improving the data collection process, and data analysts who want to leverage ecosystems.

The importance of the data collection process and how to improve it

The importance of avoiding bad processes and setting clear boundaries at both ends of the data collection process was explained. For that purpose, a method to utilize Delta Lake Streaming was introduced.

Issues in the data collection process

Problems with the data collection process include:

- Poor data quality

- Data integrity is not maintained

- Data processing speed is slow

To solve these problems, it is important to set clear boundaries on both ends of the data collection process and avoid bad processes.

How to use Delta Lake Streaming

Delta Lake Streaming is a tool for improving the data collection process. It has the following features.

- Scalable storage

- Low latency data processing

- Improve data quality and integrity

By utilizing these features, problems in the data collection process can be resolved and efficient data collection becomes possible.

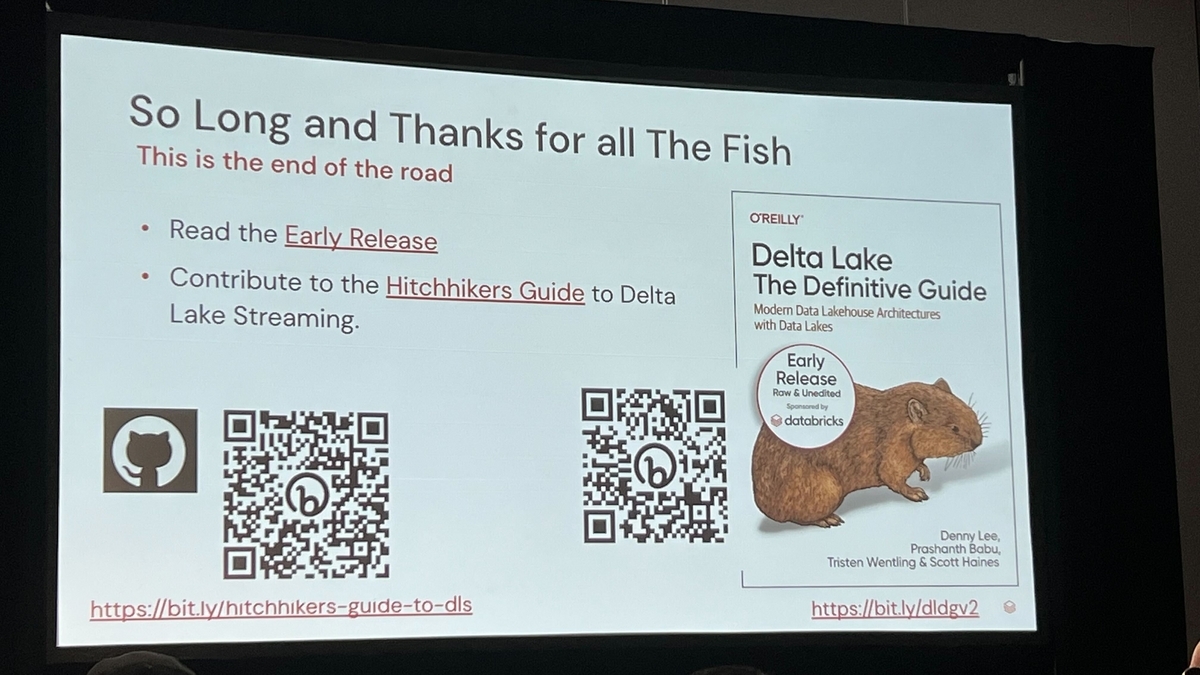

Using Hitchhiker's Guide to Delta

Hitchhiker's Guide to Delta is a detailed guidebook on how to use Delta Lake Streaming. By reading this guidebook, you will be able to:

- Basic concept of Delta Lake Streaming

- How to improve the data collection process

- Designing a practical data collection process

This guidebook will help you improve your data collection process and ensure efficient data collection.

Summary

Improving the data collection process is important to improve data quality and integrity. Leveraging Delta Lake Streaming and consulting Hitchhiker's Guide to Delta will help you achieve an efficient data collection process. By working to improve the data collection process, the use of data analysis and AI technology will become more effective, leading to business growth.

Leverage Incremental Data Collection Process and Delta Lake Streaming

By making the data collection process incremental and leveraging the collected data, you can track files and get a unified API. This article will show you how to take advantage of Delta Lake Streaming.

The Importance of Incremental Data Collection Processes

By making the data collection process incremental, you gain the following benefits:

- Easier data tracking

- Data integrity is preserved

- You can use a unified API

Leveraging these benefits will help streamline the data collection process.

How to use Delta Lake Streaming

Leveraging Delta Lake Streaming enables an incremental data collection process. Specific usage methods are as follows.

- Stream data using Delta Lake

- Version control your data

- Use features to ensure data quality

Combining these methods can result in an efficient data collection process.

About the latest concepts, features and services

Delta Lake Streaming incorporates the latest concepts, features and services. Some of them are introduced below.

Handling time series data

Delta Lake Streaming makes it easy to work with time series data. This makes it possible to efficiently analyze time series data.

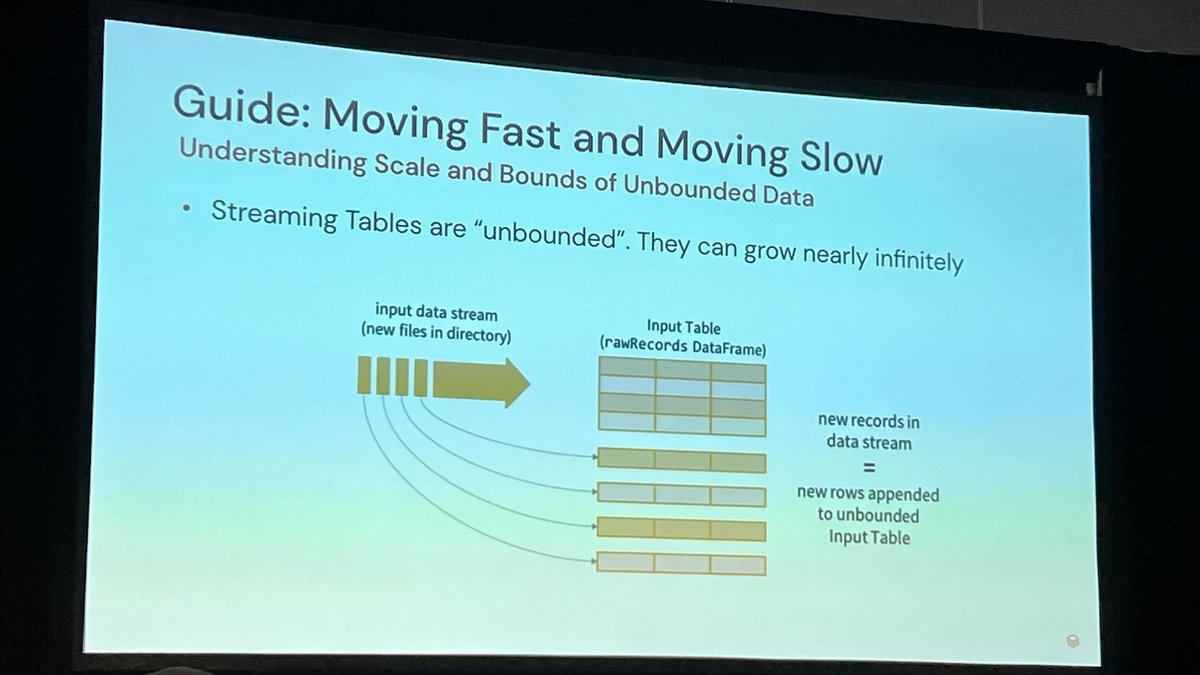

Improved scalability

Delta Lake Streaming has improved scalability. This allows efficient processing of large amounts of data.

Enhanced security

Delta Lake Streaming has enhanced security. This makes your data more secure.

Summary

Leveraging Delta Lake Streaming is key to enabling the incremental data collection process. Leveraging Delta Lake Streaming makes it easier to track data, ensures data integrity, and provides a unified API. It also streamlines the data collection process by incorporating the latest concepts, features and services.

Leveraging Delta Tables and Stream Reading

This talk explained the difference between reading a Delta table and doing a stream read. In addition, it was introduced that ecosystems other than Spark can also be used.

Difference between delta table reading and stream reading

Delta table reading and stream reading differ in how they handle data. Each feature is summarized below.

Read Delta table

- Suitable for batch processing

- Able to process large amounts of data at once

- need to wait for the process to complete

Stream reading

- Suitable for real-time processing

- Processing can be performed in situations where data is continuously flowing

- No need to wait for the process to complete, you can get the result at any time

Leverage non-Spark ecosystems

It was introduced that Delta Lake Streaming can work not only with Spark but also with other ecosystems. This allows us to combine different data processing tools to build a more efficient data collection process.

Specifically, it is possible to collaborate with the following ecosystems.

Hadoop

Hive

Presto

Flink

Kafka

By working with these ecosystems, you can set clear boundaries on both ends of the data collection process and avoid bad processes. Also, by leveraging Delta Lake Streaming, you can flexibly combine real-time and batch processing to improve the efficiency of the entire data collection process.

Summary

Leveraging Delta Lake Streaming can improve the efficiency of the data collection process. It is important to understand the difference between reading a Delta table and reading a stream, and choosing the appropriate handling method. It also enables integration with ecosystems outside of Spark, helping optimize the entire data collection process.

Production application stress testing and cost optimization

On optimizing the data collection process using Delta Lake Streaming, the talk emphasized the importance of stress testing and cost optimization for production applications.

Importance of stress testing

For production applications, stress testing is required to ensure there is no downtime. Stress testing gives you an idea of how your application will withstand real-world loads, allowing you to improve and scale your system as needed.

Importance of cost optimization

It was also explained that cost optimization is also important. By utilizing Delta Lake Streaming, the efficiency of the data collection process will be improved, leading to cost reduction. Specifically, the following points were raised.

- Reduce storage costs with data deduplication and data cleansing

- Reduce operating costs with real-time data processing

- Reduce analysis costs by improving data quality

Importance of team updates and information sharing

The talk also touched on the importance of team updates and information sharing. When using Delta Lake Streaming, it is important to share information and update within the team. It was explained that this would improve knowledge and solve problems smoothly for the entire team.

Early release for O'Reilly subscribers

Finally, it was introduced that an early release is available for O'Reilly subscribers and that feedback is sought. This will allow us to quickly incorporate the latest information and features of Delta Lake Streaming.

As described above, it was explained that stress testing and cost optimization of production applications can be achieved by utilizing Delta Lake Streaming. By streamlining the data collection process and improving data quality, we can expect to increase the competitiveness of our business.

Conclusion

This content based on reports from members on site participating in DAIS sessions. During the DAIS period, articles related to the sessions will be posted on the special site below, so please take a look.

Translated by Johann

Thank you for your continued support!