Introduction

This is Abe from the Lake House Department of the GLB Division. I wrote this article to summarize a session that I attended virtually at Data + AI Summit 2023 (DAIS).

Articles about DAIS sessions are collected on the special site below. Please take a look at it as well.

https://www.ap-com.co.jp/data_ai_summit-2023/

Optimizing data utilization with Lakehouse AI

This time, I will cover the session "Maximizing Value From Your Data with Lakehouse AI". In this talk, Databricks' Craig Wiley and Comcast's Jan Neumann discussed Lakehouse AI, which brings the AI platform and the data layer together to enable more intelligent, data-driven actions.

Now let's take a look at Lakehouse AI!

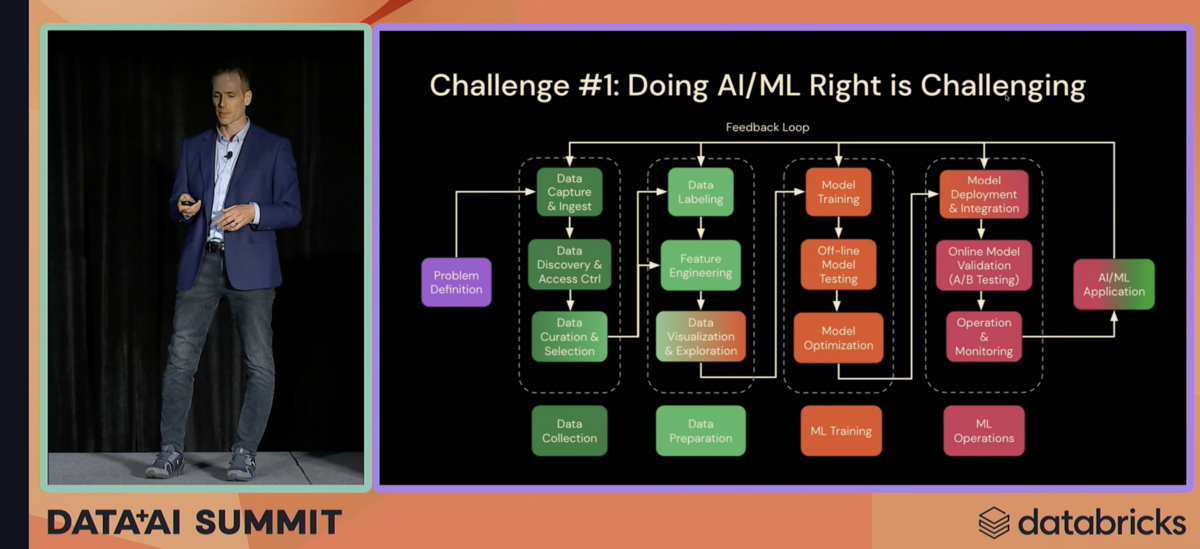

Issues with the current AI platform

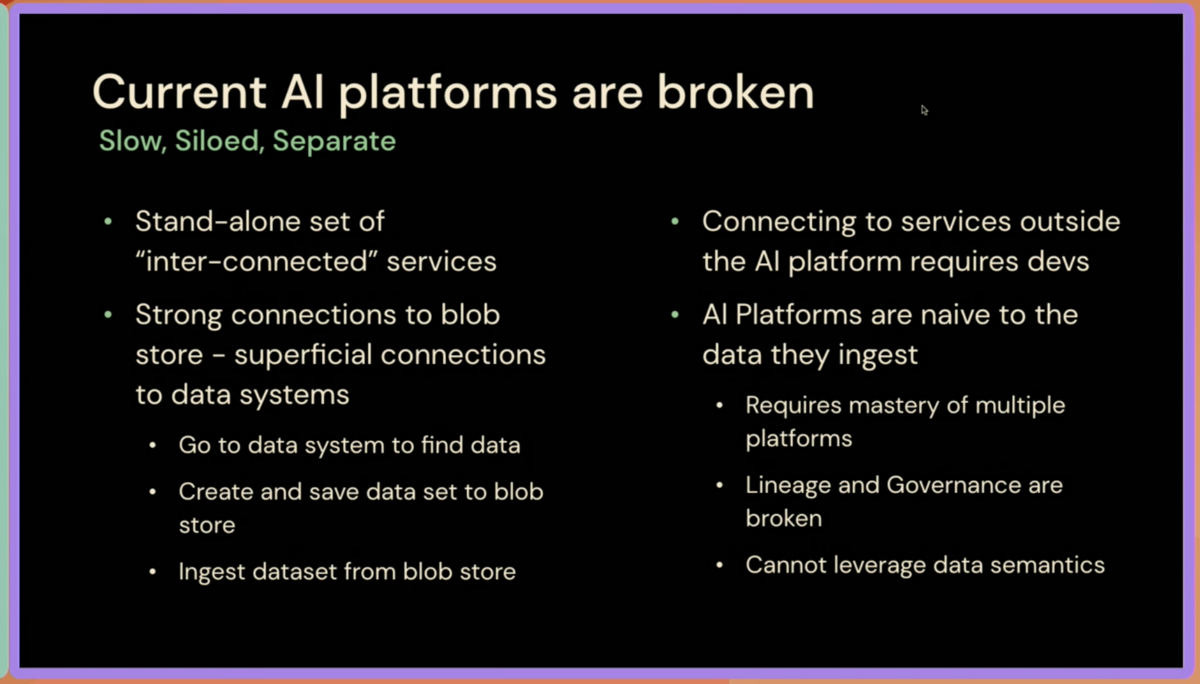

Current AI platforms are siloed from the data platform and suffer from inefficiencies, because data typically has to be exported from the source system to a blob store for processing. Specifically, the challenges are as follows.

- Large amounts of data must be moved, which takes a long time

- Data integrity is difficult to maintain

- Security and privacy issues are more likely

Lakehouse AI

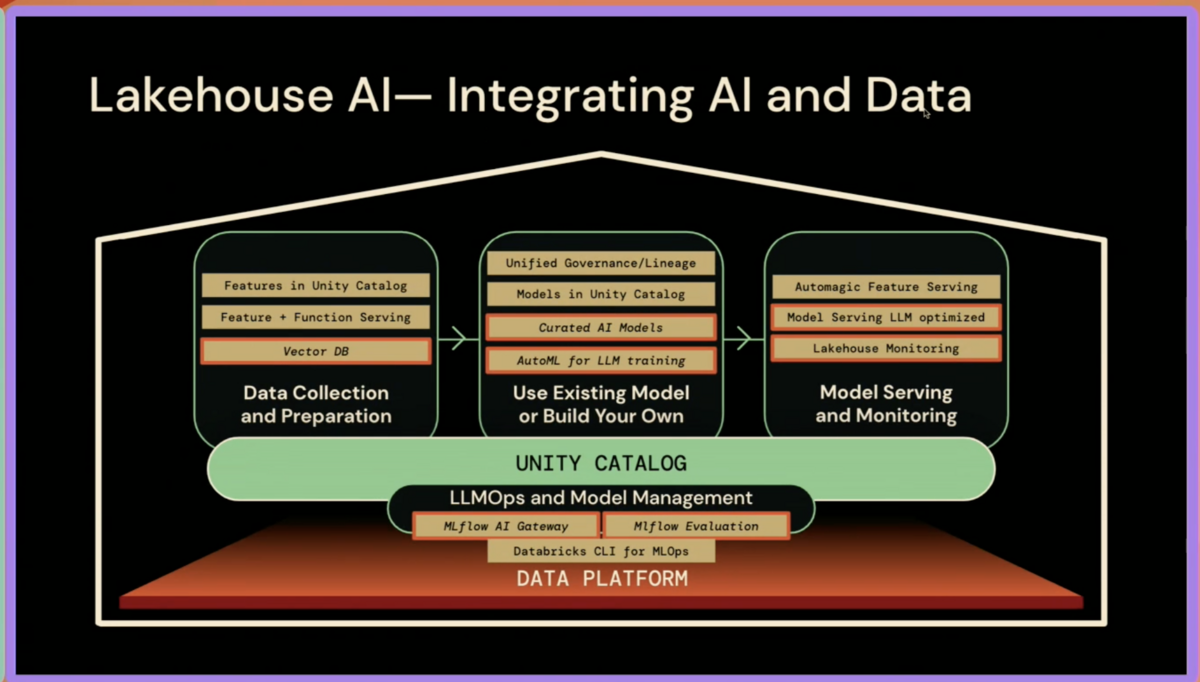

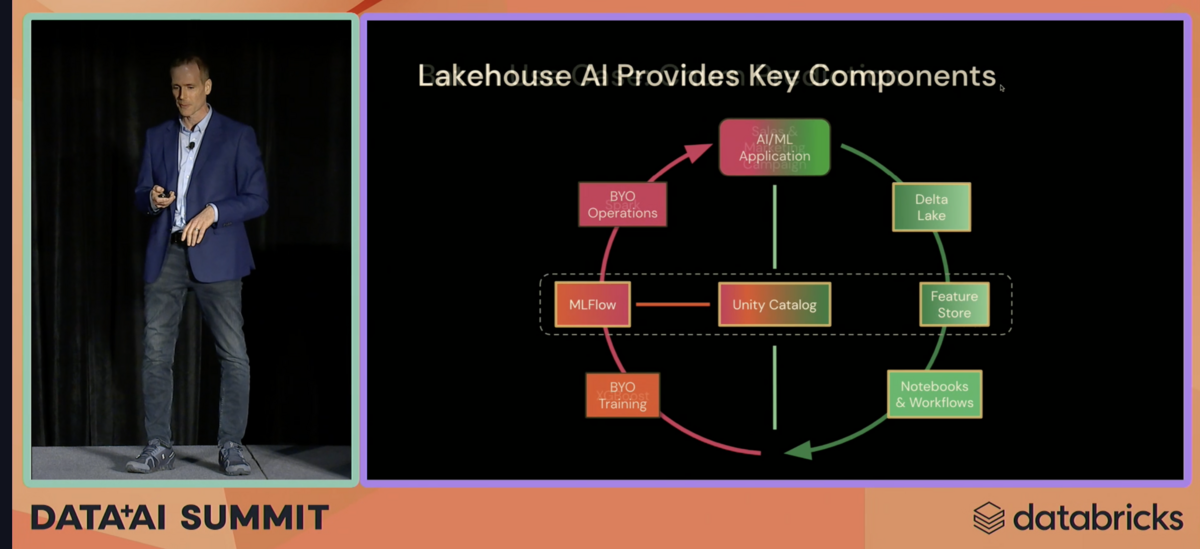

By embedding an AI platform directly into the data layer, Lakehouse AI reuses the capabilities of classic AI platforms to create a unified data and AI platform. Unity Catalog, which sits at the heart of Databricks, acts as an observing catalog that automatically detects actions in the data layer, which removes the need for manual registration and simplifies the process. Lakehouse AI tracks every action and builds a lineage graph, so you can trace how your data, features, and models are connected.
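
To make the idea of tracking actions in a graph more concrete, here is a minimal sketch of a lineage graph in plain Python. It is not the Unity Catalog API; the `LineageGraph` class and the asset names such as `raw.clicks` are hypothetical and exist only for illustration. The sketch records "this asset was derived from that asset" edges and then answers the question "what is affected if this table changes?".

```python
from collections import defaultdict, deque


class LineageGraph:
    """Toy lineage graph: edges point from an upstream asset to what was derived from it."""

    def __init__(self):
        self._downstream = defaultdict(set)

    def record(self, source, target):
        """Record that `target` was produced from `source`."""
        self._downstream[source].add(target)

    def downstream_of(self, asset):
        """Return every asset reachable from `asset` (breadth-first traversal)."""
        seen = set()
        queue = deque([asset])
        while queue:
            current = queue.popleft()
            for child in self._downstream.get(current, ()):
                if child not in seen:
                    seen.add(child)
                    queue.append(child)
        return seen


# Hypothetical assets: a raw table, a feature table, a model, and an endpoint.
graph = LineageGraph()
graph.record("raw.clicks", "features.user_activity")
graph.record("features.user_activity", "models.recommender")
graph.record("models.recommender", "endpoints.recommender_prod")

impacted = graph.downstream_of("raw.clicks")
print(sorted(impacted))
```

In a real catalog these edges would be captured automatically as jobs read and write data, rather than through explicit `record` calls as in this sketch.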

Lakehouse AI newly adds a vector database and AutoML for LLM development, and it is attracting a lot of attention. These features were in private preview at the time of the session, so they were not yet ready to use, but according to the talk most of them would become available within 4-6 weeks.
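
For readers unfamiliar with what a vector database does for LLM applications, the core operation is a nearest-neighbor search over embeddings. The following is a minimal sketch of that operation using cosine similarity. It is a brute-force illustration, not the Databricks feature; the document IDs and the three-dimensional vectors are made-up placeholders (real embeddings have hundreds or thousands of dimensions, and real systems use approximate indexes).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the IDs of the k vectors in `index` most similar to `query`."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical document embeddings.
index = {
    "doc_billing": [0.9, 0.1, 0.0],
    "doc_outage": [0.1, 0.9, 0.1],
    "doc_upgrade": [0.7, 0.2, 0.3],
}

results = top_k([1.0, 0.0, 0.0], index, k=2)
print(results)  # ['doc_billing', 'doc_upgrade']
```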

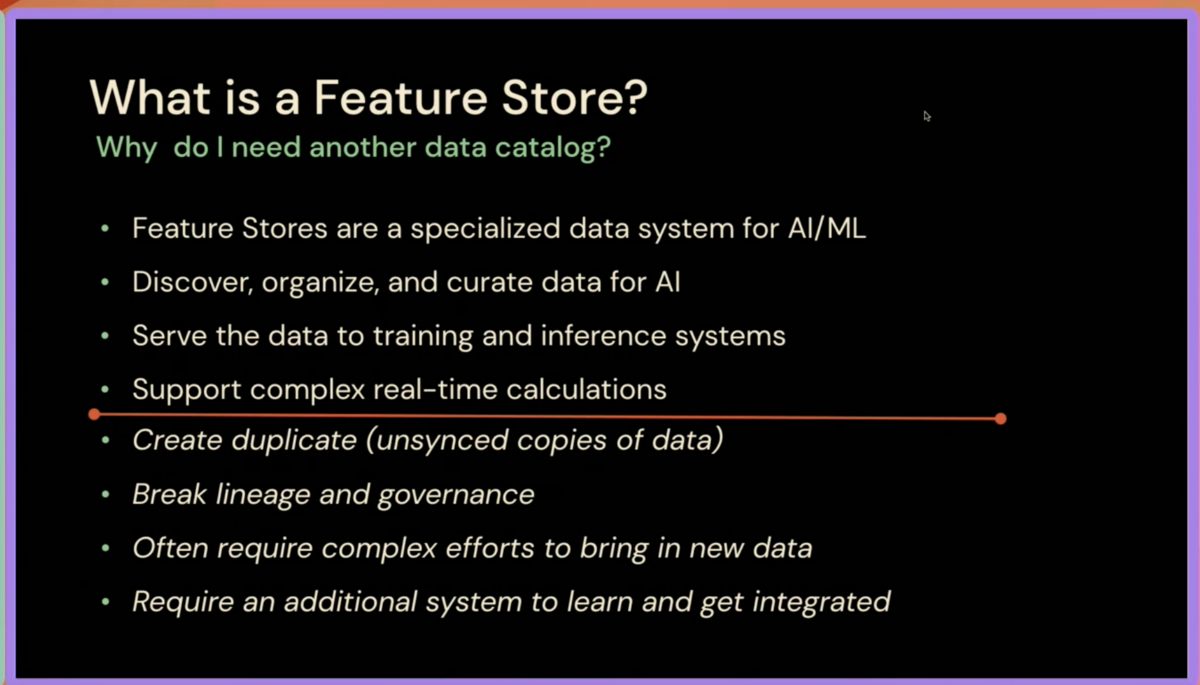

Embedding an AI platform starts with data collection and preparation, and feature stores are a recommended best practice for preparing and retrieving data for machine learning. The Databricks Feature Store organizes and manages feature data so that it can feed high-performance training and inference systems and support real-time feature computation. To solve data consistency and lineage issues, the Feature Store has been merged into Unity Catalog. As a result, features are governed and discovered through Unity Catalog, which makes the data easy to find, reduces the number of separate systems required, and increases productivity.

Latest concepts and features

MLflow is the primary framework for MLOps, and recent investment has focused on large language models (LLMs), providing APIs, a model gateway, model monitoring, result comparison, and more. In particular, by wrapping SaaS APIs, models from providers such as OpenAI (including GPT-3.5), Anthropic, and Cohere can be incorporated into MLflow.

Databricks has also invested significantly in the Databricks CLI so that MLOps infrastructure can be defined as code and integrated deeply with CI/CD pipelines. This allows ML engineering teams to focus on high-value features and reap the benefits of having data, models, and MLOps within the same data layer.
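
The idea of putting several hosted model providers behind one interface can be sketched as follows. This is a conceptual illustration only and does not use the MLflow API: the `ModelGateway` and `Route` classes, the route names, and the `fake_provider` function are all hypothetical, and the provider calls are stubbed so that the example runs without network access or API keys.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Route:
    """One named route: which provider and model to call, and how to call it."""
    provider: str
    model: str
    call: Callable[[str, str], str]  # (model, prompt) -> completion text


class ModelGateway:
    """Toy gateway: callers use a route name and never touch provider-specific clients."""

    def __init__(self):
        self._routes: Dict[str, Route] = {}

    def register(self, name, route):
        self._routes[name] = route

    def query(self, name, prompt):
        route = self._routes[name]
        return {
            "route": name,
            "provider": route.provider,
            "model": route.model,
            "text": route.call(route.model, prompt),
        }


def fake_provider(prefix):
    """Stand-in for a real provider client; it only echoes the prompt."""
    def call(model, prompt):
        return f"[{prefix}:{model}] {prompt}"
    return call


gateway = ModelGateway()
gateway.register("chat", Route("openai", "gpt-3.5-turbo", fake_provider("openai")))
gateway.register("summarize", Route("other", "placeholder-model", fake_provider("other")))

response = gateway.query("chat", "hello")
print(response["text"])
```

The design point is that swapping the model behind the `chat` route changes only the registration line, not the calling code, and credentials can stay inside the gateway.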

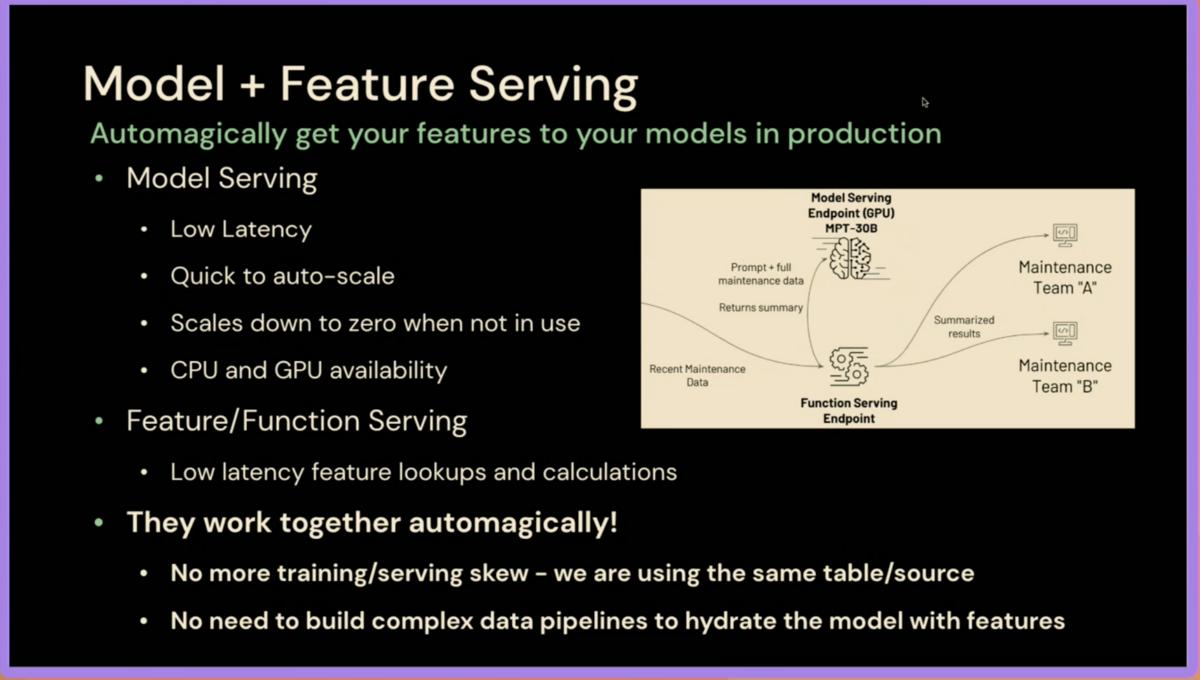

The low-latency, auto-scaling model serving product released last year can be combined with feature serving capabilities. This allows you to serve models that use features managed in Unity Catalog (UC), eliminating the need for separate data pipelines to supply features at inference time. Organizations reportedly save a lot of time by taking advantage of this feature.
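
The combination of model serving and feature serving can be pictured as a served model that looks up its own features by key, so the caller sends only an ID. Below is a minimal sketch under that assumption. The `FeatureTable` and `ServedModel` classes, the feature names, and the scoring weights are hypothetical and are not the Databricks API.

```python
class FeatureTable:
    """Toy feature table keyed by a primary key, standing in for a governed feature table."""

    def __init__(self, rows):
        self._rows = rows

    def lookup(self, key):
        return dict(self._rows[key])


class ServedModel:
    """Wraps a prediction function and fetches the features it needs at request time."""

    def __init__(self, predict_fn, feature_table, feature_names):
        self._predict_fn = predict_fn
        self._feature_table = feature_table
        self._feature_names = feature_names

    def predict(self, key):
        features = self._feature_table.lookup(key)
        vector = [features[name] for name in self._feature_names]
        return self._predict_fn(vector)


def score(vector):
    """Hypothetical linear model: more watch time raises the score, support calls lower it."""
    watch_hours, support_calls = vector
    return 0.5 * watch_hours - 0.25 * support_calls


table = FeatureTable({
    "u1": {"watch_hours": 12.0, "support_calls": 1},
    "u2": {"watch_hours": 3.0, "support_calls": 4},
})
endpoint = ServedModel(score, table, ["watch_hours", "support_calls"])

# The caller passes only the key; no client-side feature pipeline is needed.
print(endpoint.predict("u1"))  # 5.75
```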

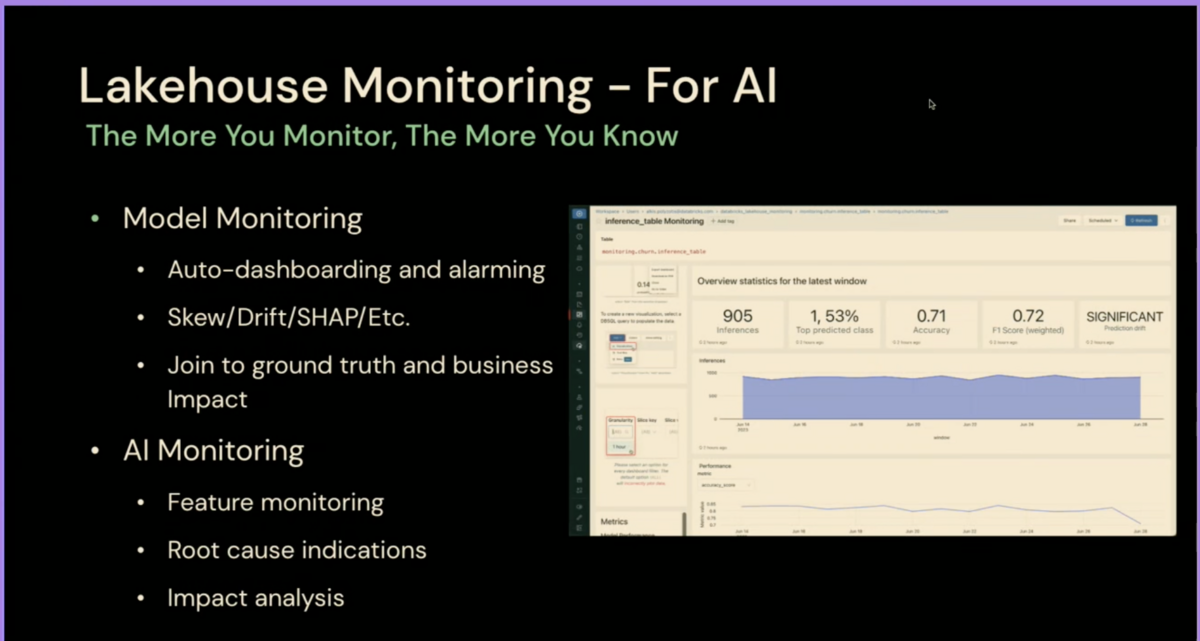

Because the AI platform is embedded in the data layer, Lakehouse Monitoring can monitor not only the model but also the features used to create the model. This integration can detect when the overall model is not working as expected and provide the information needed to solve the problem. This set of capabilities demonstrates the benefits of embedding an AI platform in the data layer, offering a unique experience not available on other platforms.
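
As a rough illustration of why monitoring features alongside the model is useful, the sketch below compares a baseline window with a current window for each monitored column and flags the columns whose mean has shifted. The metric (mean shift measured in baseline standard deviations), the threshold of 2.0, and the sample values are assumptions chosen for this example; they are not what Lakehouse Monitoring computes internally.

```python
import statistics


def mean_shift(baseline, current):
    """Absolute shift of the current mean from the baseline mean, in baseline std devs."""
    base_mean = statistics.fmean(baseline)
    base_std = statistics.pstdev(baseline)
    cur_mean = statistics.fmean(current)
    if base_std == 0:
        return 0.0 if cur_mean == base_mean else float("inf")
    return abs(cur_mean - base_mean) / base_std


def drifted_columns(baseline, current, threshold=2.0):
    """Return the names of the columns whose mean shift exceeds the threshold."""
    return sorted(
        name for name in baseline
        if mean_shift(baseline[name], current[name]) > threshold
    )


# Hypothetical windows: one input feature and the model's own predictions.
baseline = {
    "watch_hours": [10, 12, 11, 9, 13],
    "prediction": [0.5, 0.6, 0.55, 0.45, 0.5],
}
current = {
    "watch_hours": [30, 28, 35, 31, 29],
    "prediction": [0.5, 0.55, 0.6, 0.5, 0.45],
}

drifted = drifted_columns(baseline, current)
print(drifted)  # ['watch_hours']
```

In this made-up data the prediction distribution still looks normal while an input feature has shifted, which is the kind of signal that would be missed by monitoring the model output alone.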

Using Lakehouse AI at Comcast

Comcast uses AI across entertainment, personalized recommendation systems, video AI products, smart cameras, and conversational intelligent assistants. All of these rely on data and AI platforms, which were developed separately in the past, resulting in duplicated work and difficulty in development and deployment.

As a solution, Comcast designed an AI platform that emphasizes a balance between innovation and standardization. Various abstractions were introduced to streamline the process from data collection to inference. Notably, the feature store links data preparation and model training, which allows training and inference to be decoupled.

The platform uses Delta Lake for its data platform, feature store, notebooks and workflows for model development, MLflow for its model registry, and Unity Catalog for its common governance and metadata layer.

The platform enables a variety of use cases. In particular, the Xfinity system uses it to suggest the next best action to the customer. The platform uses Redis as a feature cache and NVIDIA Triton for serving PyTorch recommendation models.
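
The role of a feature cache in front of a slower feature store can be sketched with a simple read-through cache. This is a generic pattern, not Comcast's implementation: a Python dict stands in for Redis, and the `FeatureCache` class, the `load_from_store` function, and the feature values are hypothetical.

```python
class FeatureCache:
    """Read-through cache: return cached features, loading from the store on a miss."""

    def __init__(self, load_from_store):
        self._load = load_from_store
        self._cache = {}  # stands in for an external cache such as Redis
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        value = self._load(key)
        self._cache[key] = value
        return value


store_calls = []


def load_from_store(key):
    """Hypothetical slow lookup against the offline feature store."""
    store_calls.append(key)
    return {"customer_id": key, "recent_support_calls": 2}


cache = FeatureCache(load_from_store)
first = cache.get("u1")   # miss: goes to the store
second = cache.get("u1")  # hit: served from the cache
print(cache.hits, cache.misses, store_calls)
```

A production cache would also need expiry or invalidation so that served features do not go stale; that is omitted here to keep the sketch short.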

One of Comcast's key goals is to democratize access to AI, allowing domain experts to handle the data preparation stage and inject knowledge as features.

Summary

Lakehouse AI is a platform that enables more intelligent actions based on data by unifying the AI platform and the data layer. It is expected to have a major impact on future business because it can solve the problems of current AI platforms and improve the efficiency of data utilization.

Conclusion

This content is based on reports from members participating in DAIS sessions on site. During the DAIS period, articles related to the sessions will be posted on the special site linked in the introduction, so please take a look.

Translated by Johann

Thank you for your continued support!