Preface

In this session, we explored how to efficiently scale Retrieval-Augmented Generation (RAG) and embedding computations using Ray and Pinecone. Engineers Roy (Engineering Manager at Pinecone) and Cheng Su (Engineering Manager from AnyScale's data team) utilized their extensive experience for a comprehensive elucidation on this sophisticated topic.

The session began with brief introductions about the speakers' professional backgrounds and their respective companies. After the introduction, we quickly delved into the main agenda which focused on scaling challenges within RAG systems, and deeply explored related technologies such as vector databases and embeddings.

The discussion started by clarifying the concept of Retrieval Augmented Generation (RAG), emphasizing its importance in handling large datasets effectively. Furthermore, the fundamentals of vector databases and embeddings, which are crucial to the functionality of RAG systems, were outlined. These explanations set the stage to understand why integrating these technologies significantly enhances data processing capabilities.

By attending this session, participants expanded their knowledge base on RAG and explored methods of processing large volumes of data with optimal efficiency using advanced technologies like Ray and Pinecone. This provided valuable insights into navigating the complexities of large-scale data manipulation and emphasized the significance of these technologies in modern AI solutions.

Scaling RAG with Ray, AnyScale, and Pinecone

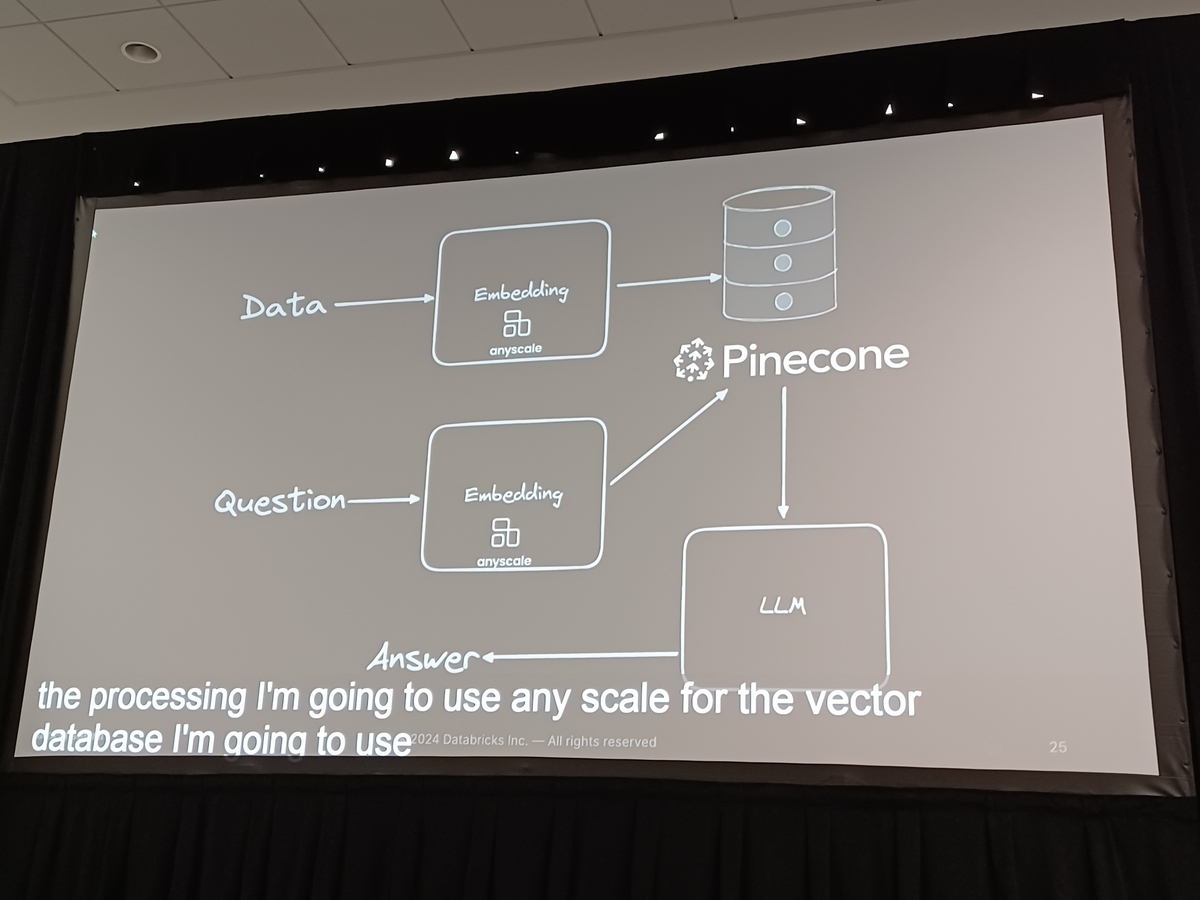

Initially, the challenge involves loading and processing large amounts of data where Ray plays a crucial role. As a distributed application framework, Ray efficiently handles and embeds large data sets, preparing for the indexing phase that follows.

Once data is successfully embedded, it is batch-uploaded for indexing on Pinecone servers. As a leading provider of vector database services, Pinecone excels in managing and retrieving large volumes of embedded vectors. This structured indexing process is crucial and has a significant impact on the system's retrieval performance.

After the indexing phase, a large-scale Retrieval Evaluation Criterion (REC) assessment is conducted. This evaluates the factual accuracy of information retrieved and generated by LLMs, ensuring generated content is factually complete and enhancing its reliability.

The session detailed the complexities involved in developing RAG-based LLM applications using Ray and Pinecone. Discussions emphasized how crucial it is to process large datasets with Ray, efficiently embed them, and optimize the data retrieval process with Pinecone for high performance and precision. This meticulous setup provides a solid foundation for LLMs, ensuring outputs are factually accurate, relevant, and reduce errors like 'hallucinations' or factual inaccuracies.

'Scaling RAG and Pinecone: A Focused Discussion on Embedding Computations with Ray and Pinecone' expanded our exploration into using vector databases and embedding processes in data-intensive environments, delving deeper into the complexities of Retrieval Augmented Generation (RAG). We also examined the historical context of RAG, its pivotal milestones, and its widespread adoption across various industries. Additionally, insights into the central role played by AnyScale in scaling this technology were gained.

History and Milestones of RAG

- 2019: Founded by creators of RAG, AnyScale tackled the challenges of distributed computing specifically for Python-centric AI applications, transforming RAG into a scalable open-source solution.

- 2020: Pioneering the release of RAG version 1.0 aimed at enhancing and simplifying the development of AI applications.

- 2022: Two years after its initial launch, RAG version 2.0 introduced, offering developers more robust features.

- 2023: Since then, the RAG community has made significant advances around ARN and AI infrastructure, with a vibrant presence on GitHub (30,000 stars) and an active base of hundreds of contributors worldwide, continually impacting the AI landscape.

Widespread Adoption of RAG Across Industries

The adoption of RAG across multiple sectors indicates its effectiveness and the trust developers place in its capabilities. From small startups to large enterprises, its deployment plays a central role in addressing complex computing and data processing needs.

This historical and industry-wide perspective not only highlights the progressive evolution of RAG but also underscores AnyScale's commitment to advancing this technology. By integrating these insights, developers and industry experts can better navigate the AI application and distributed computing landscape, leveraging the robust framework and community-driven enhancements of RAG. This session illuminated both the technical complexities and practical deployments of RAG, providing attendees with a comprehensive understanding essential for further innovation.

In the Q&A and subsequent discussions, much attention was given to integrating and scaling knowledge graphs within the RAG system. The concept of the 'Oracle Test' was highlighted, where models, given the exact documents and evidence required, typically achieve a performance score around 0.9, instead of a perfect 1.0. This emphasizes the inherent limitations and harsh challenges of fully integrating knowledge graphs within scalable environments.

Especially with the need to accommodate billions of nodes, scaling knowledge graphs presents significant challenges. Experts discussed sophisticated methods and considerable resources required to effectively scale these, and while perfection in performance might not be achievable, the utility and potential improvements provided by knowledge graphs are worth exploring within the realm of RAG applications.

Conclusion

Through this session's explorations, a comprehensive understanding of the potential and complex nature of knowledge graphs to enhance RAG systems was achieved. The ongoing pursuit for more scalable and efficient solutions is evident. These developments promise to unlock the full potential of knowledge graphs, playing a central role in advancing the capabilities of sophisticated LLMs.

This discussion serves as a valuable resource for developers and researchers focused on AI and machine learning infrastructure, enriching knowledge and strategies for implementing effective RAG systems. As the field progresses, future research and practical advancements are highly anticipated.

About the special site during DAIS

This year, we have prepared a special site to report on the session contents and the situation from the DAIS site! We plan to update the blog every day during DAIS, so please take a look.