Introduction

This is May from the Lakehouse Department of the GLB Division.

Working with members attending on site and members watching from Japan, AP Communications is sharing the session "Introduction to Data Engineering on the Lakehouse", which discusses how to become a data-driven company by integrating data engineering and AI. In this session, Databricks proposes an integrated platform for data engineering and AI built around a new category, the "Lakehouse". The target audience is data engineers, data scientists, data analysts, and executives whose companies aspire to become data-driven.

Challenges to data-driven enterprises and the complexity of data engineering

Leveraging data engineering and AI is essential to becoming a data-driven enterprise. However, integrating these technologies and using them effectively is not easy. The session explained the challenges companies face in becoming data-driven, the complexity of data engineering, and how Databricks proposes a new category called Lakehouse to eliminate the dual structure of data engineering and AI.

Challenges for enterprises to become data-driven

The challenges of becoming a data-driven enterprise include:

- Data collection and organization: Companies need to collect data from various data sources and organize it.

- Data analysis and utilization: It is necessary to develop methods to analyze the collected data and utilize it for business.

- Data security and privacy: Companies should take measures to ensure data security and privacy.

Addressing these challenges requires data engineering and AI techniques, but integrating them is not easy.

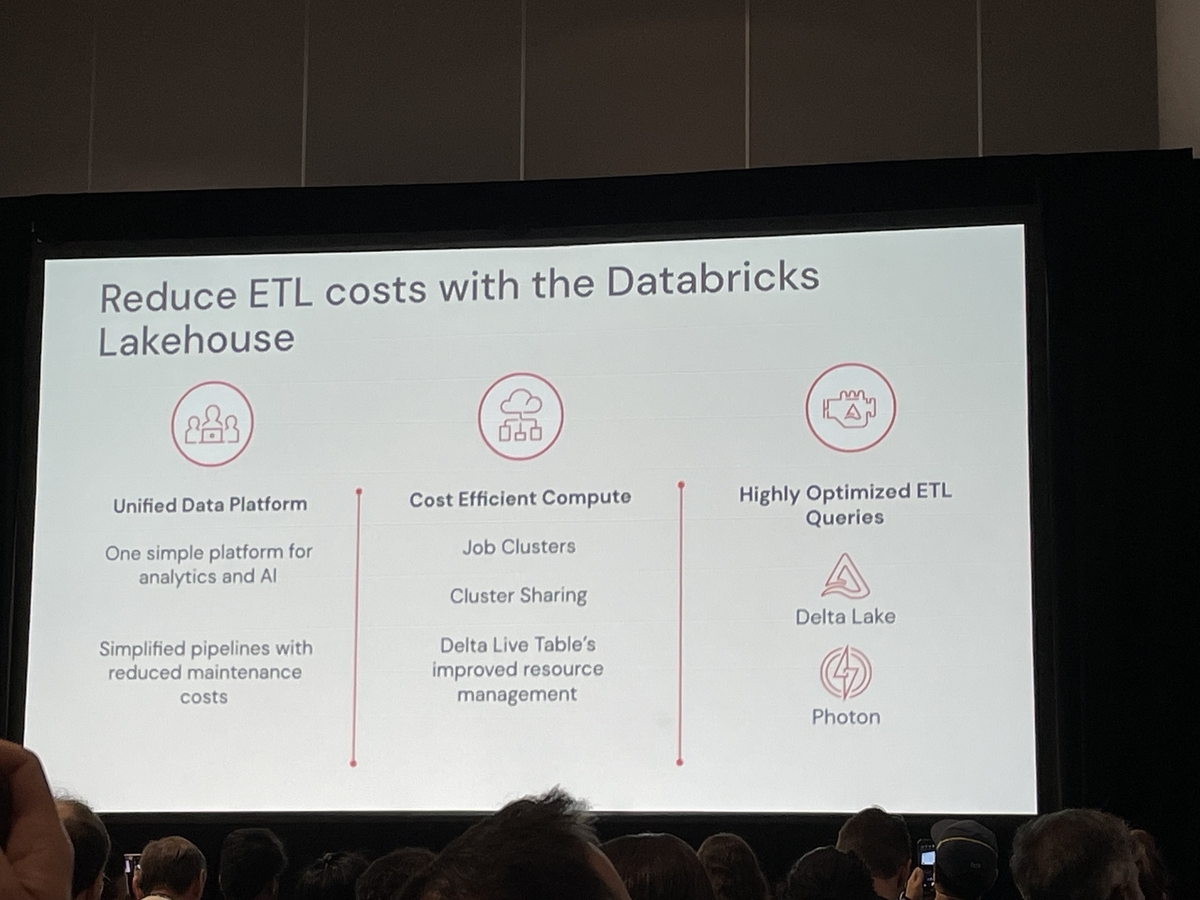

Eliminate the dual structure of data engineering and AI by introducing Lakehouse

The Lakehouse proposed by Databricks is a new category intended to eliminate the dual structure of data engineering and AI. It has the following features:

- Integration of data engineering and AI: Data engineering and AI technology can be integrated and effectively utilized.

- Efficient data collection and organization: You can collect data from various data sources and organize it efficiently.

- Accelerate data analysis and utilization: Collected data can be analyzed at high speed and utilized for business.

- Enhanced data security and privacy: It provides measures to ensure data security and privacy.

By introducing the Lakehouse, companies can address the challenges of becoming data-driven and eliminate the dual structure of data engineering and AI. This enables them to use data effectively and accelerate business growth.

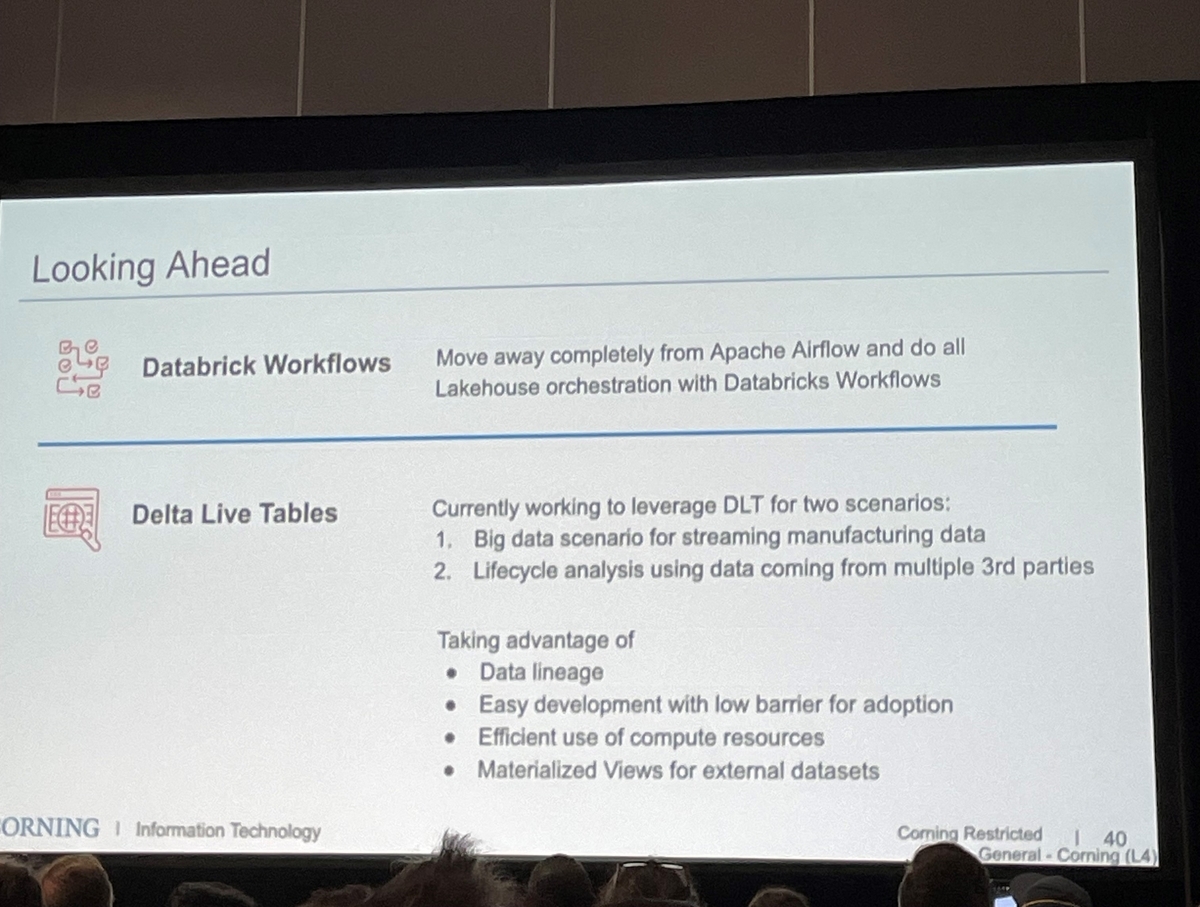

Utilizing Lakehouse and Delta Live Tables

Ensuring data quality and simplifying table management

Delta Live Tables (DLT), a solution built on the Lakehouse, provides a platform that integrates the dual structure of data engineering and AI. This helps enterprises meet the challenges of becoming data-driven. Using DLT is expected to simplify table management and ensure data quality.

The main features of DLT are:

- Data versioning: DLT can track the history of data changes and perform version control. This makes it easy to refer to past data and to review how the data has changed.

- Schema Evolution: DLT can flexibly change the schema of data. This minimizes system-wide changes when the data structure changes.

- Improving data quality: DLT provides functionality to improve data quality. For example, you can validate or cleanse the data.
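
To make the three features above more concrete, here is a minimal sketch in plain Python. It is not the DLT API and does not run on Databricks; it is only a toy in-memory table, written under the assumption that "validation" means dropping rows that fail named rules, "schema evolution" means accepting new columns as they appear, and "versioning" means keeping a snapshot after every write. The class `VersionedTable`, the rule name `valid_temperature`, and the fields `device_id`, `temperature`, and `humidity` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

Row = Dict[str, Any]
Rule = Callable[[Row], bool]


@dataclass
class VersionedTable:
    """Toy in-memory table (illustration only, not the DLT API)."""

    columns: List[str] = field(default_factory=list)
    # versions[0] is the empty table; every append adds one snapshot.
    versions: List[List[Row]] = field(default_factory=lambda: [[]])

    def append(self, rows: List[Row], rules: Dict[str, Rule]) -> int:
        """Append rows that pass every rule; return how many were dropped."""
        # Data quality: keep only rows that satisfy all named rules.
        valid = [row for row in rows if all(rule(row) for rule in rules.values())]
        # Schema evolution: register columns that have not been seen before.
        for row in valid:
            for name in row:
                if name not in self.columns:
                    self.columns.append(name)
        # Versioning: store a new snapshot instead of mutating the old one.
        self.versions.append(self.versions[-1] + valid)
        return len(rows) - len(valid)

    def read(self, version: Optional[int] = None) -> List[Row]:
        """Read the latest snapshot, or an earlier one by version number."""
        snapshot = self.versions[-1] if version is None else self.versions[version]
        # Rows written before a column existed show None for that column.
        return [{name: row.get(name) for name in self.columns} for row in snapshot]


# Hypothetical rule: temperature must be present and within a plausible range.
rules = {
    "valid_temperature": lambda r: r.get("temperature") is not None
    and -50 <= r["temperature"] <= 150
}

table = VersionedTable()
dropped = table.append(
    [
        {"device_id": "a1", "temperature": 21.5},
        {"device_id": "a2", "temperature": None},  # fails the rule
    ],
    rules,
)
table.append([{"device_id": "a3", "temperature": 19.0, "humidity": 40}], rules)

print(dropped)                 # rows rejected by the first append
print(table.read())            # latest version, including the new column
print(table.read(version=1))   # the table as it was after the first append
```

In DLT itself, data quality rules of this kind are declared as expectations on a table definition rather than written by hand, so the sketch should be read as an explanation of the idea, not as how DLT is implemented.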

Honeywell IoT data processing case study

As an example of data processing and management using DLT, the session introduced Honeywell's IoT data processing. Honeywell leverages DLT to efficiently process massive amounts of data collected from IoT devices and gain business insights.

Specifically, the process is as follows.

- Data collection from IoT devices: Honeywell collects data from various IoT devices and stores it in DLT.

Conclusion

This content is based on reports from members participating on site in DAIS sessions. During the DAIS period, articles related to the sessions will be posted on the special site below, so please take a look.

Thank you for your continued support!

Translated by Johann