Preface

In this session, the CEO of NCD, a data-centered organization, spoke extensively about his experiences in developing tools for data science and AI projects. The discussion was centered around their process of building the wholly private and open source RAG pipeline at a production scale. They leveraged tools such as HyperBase, Dataverse, and BBRX. This process ensures full security during data processing in AI applications.

The CEO, who has over 30 years of history in developing tools for data science projects, emphasized the importance of coding and data science in the current IT landscape. Starting from the development of standard libraries, the focus has gradually shifted to creating tools to support data science projects. More about the CEO's profile can be found at chipwalkers.com.

- Preface

- Avoiding Vendor Lock-in and Ensuring Data Portability in Production-Scale AI Pipelines

- Building a Fully Private Production Scale OSS RAG Pipeline with DBRX, Spark, and LanceDB

- About the special site during DAIS

The session began by explaining the benefits of establishing secure AI data pipelines through the use of HyperBase, Dataverse, and DPRX. It also demonstrated how these tools are implemented in real-time.

HyperBase serves as a tool for data embedding, model training, and making predictions. This tool allows securely storing predictive results in a database and securely retrieving previous results as needed.

On the other hand, Dataverse provides unlimited connectivity to various data sources and a flexible data model. Using this tool, users can easily access the necessary data and facilitate the construction of predictive models.

DPRX plays a crucial role in data embedding and model training. It particularly stands out when dealing with large amounts of data, enabling quick and accurate predictions.

In essence, these technologically advanced and user-friendly tools each have unique advantages. They can be utilized in various ways to create a large-scale, completely private, open-source RAG pipeline using AI. This session discussed how leveraging these tools can provide effective solutions to data security challenges.

In this section, we provide a demonstration of building the RAG pipeline using a community-managed Harry Potter-themed video site as an example. The aim is to conduct user behavior analysis (UBA) through relevant apps. The community holds the responsibility to determine vital information needed to maintain operational functions, such as defining UBAs or setting passwords.

Following this process, relevant Uniform Resource Identifiers (URIs) are created based on the information provided. This approach can be a breakthrough for newcomers and deliver a simple and user-friendly intervention.

We also offer interactive visual representations of user reactions which detail answers to questions like “What constitutes feedback?” and “How does value manifest itself?”. This visual approach to the information guides what actions should be taken and how these tasks would be most efficiently performed.

Through these iterative processes, we essentially create a “vision”, in other words, a roadmap to generate future prospects and ideas.

However, the above description is just part of the broader purpose of building a fully private production-scale RAG pipeline as implemented in this session. This pipeline is built and operated using open-source tools like DBRX, Spark, and LanceDB. They handle well the issues related to data security that arise when a company integrates AI into the production stream.

The biggest advantage derived from these practices is the promise of privacy protection. This is fundamental when discussing the deployment of AI at a commercial scale. The integrated use brought about by building a fully private RAG pipeline using DBRX, Spark, LanceDB greatly emphasizes this notion.

In the next section, we will explore the technical aspects and technologies involved in building this RAG pipeline. We will detail the security measures implemented, technical challenges encountered, and solutions arrived at throughout this process.

Avoiding Vendor Lock-in and Ensuring Data Portability in Production-Scale AI Pipelines

Vendor lock-in problems pose a major obstacle when companies attempt to transition from experimental setups to production adoption of AI. One factor contributing to this issue might be the dependence on Language Learned Models (LLMs) and embedding models managed by the host, which often results in losing flexibility in data management and complete control over the data.

To circumvent these difficulties, companies must assert data portability. Data portability refers to the power of organizations to manage movement and extraction of their data without constraints. This ability is extremely important, both to maintain competitiveness and to handle data appropriately without restrictions imposed by the host.

Robust data storage systems play an important role here. The storage of metadata should not be limited to merely PCs. Instead, they should have the ability to either integrate it into their system or redirect it to data lanes. This facilitates efficient management of different important records and easier access to all data.

Even when landscapes of data change, no substantial transformations should be needed on the data set. Some minor adjustments might be required, but the overall continuity of data should be kept. Flexibility in data management should not necessitate serious changes to data sets. Rather, it should promote handling data under changing conditions, and this should be done without negatively affecting their accuracy and completeness.

In conclusion, preventing vendor lock-in and promoting data portability help navigate the complexity that companies encounter during the implementation of AI. This proactive approach provides more granular control and flexibility in handling their data to organizations. Consequently, organizations can adapt to and cope with changing scenarios, expand their forecasts, and enable them to utilize more efficiently the potential of their data.

Building a Fully Private Production Scale OSS RAG Pipeline with DBRX, Spark, and LanceDB

One challenge companies face when implementing AI in production environments is data security. Typically, data is sent to Hosted Language Models (HLMs) and Hosted Embedding Models, and the resulting vectors are then stored in a Hosted Vector Database. In this discussion, the focus is on creating scalable data storage and distributed systems.

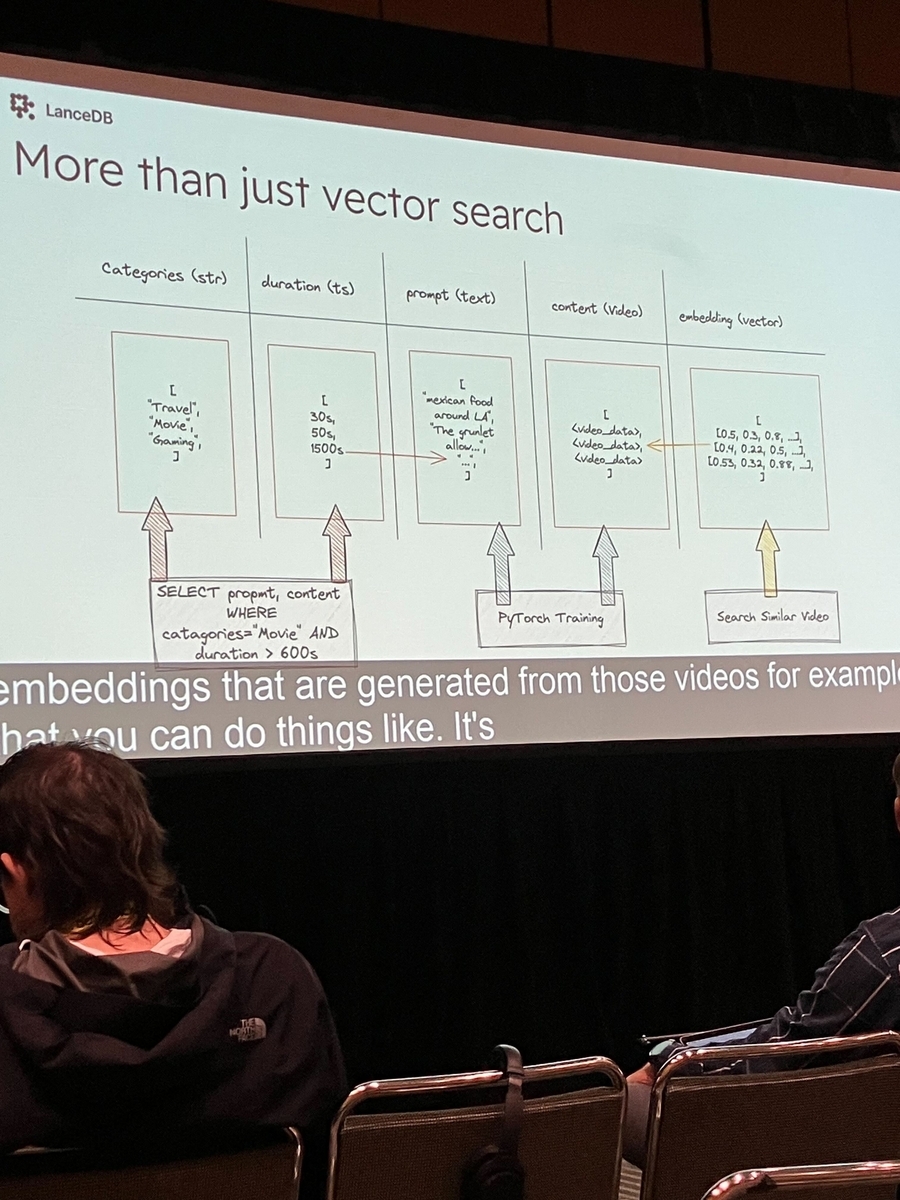

Firstly, data exists in various forms. This includes streams, network data, video content, and values generated from these sources. Different operations can be performed using this data. Users can search for specific content based on metadata, learn directly from data, or execute extensive data searches in Android call logs. All these operations are performed on data stored in memory.

A key factor in this process is the utilization of Change Data Capture (CDC). Using CDC significantly improves data handling. Let's take a closer look at how this process works.

In conclusion, creating scalable data storage and distributed systems offers solutions to data security issues and removes barriers faced by companies when deploying AI into production environments. These systems are essential for efficient management and proper handling of the data by the companies.

About the special site during DAIS

This year, we have prepared a special site to report on the session contents and the situation from the DAIS site! We plan to update the blog every day during DAIS, so please take a look.