Introduction and Overview

The session "Migrating and Optimizing Large-Scale Streaming Applications with Databricks" opened the conference with practical guidance on optimizing large-scale streaming applications. It was especially useful for anyone who needs to handle massive data volumes on Databricks.

Sharif Doumi and Donghui Li, lead software engineers at Freewheel, shared their experience managing complex data streams, with a focus on Freewheel's BizWax platform and programmatic advertising. Their introduction set the stage for the high-throughput data processing that digital advertising depends on.

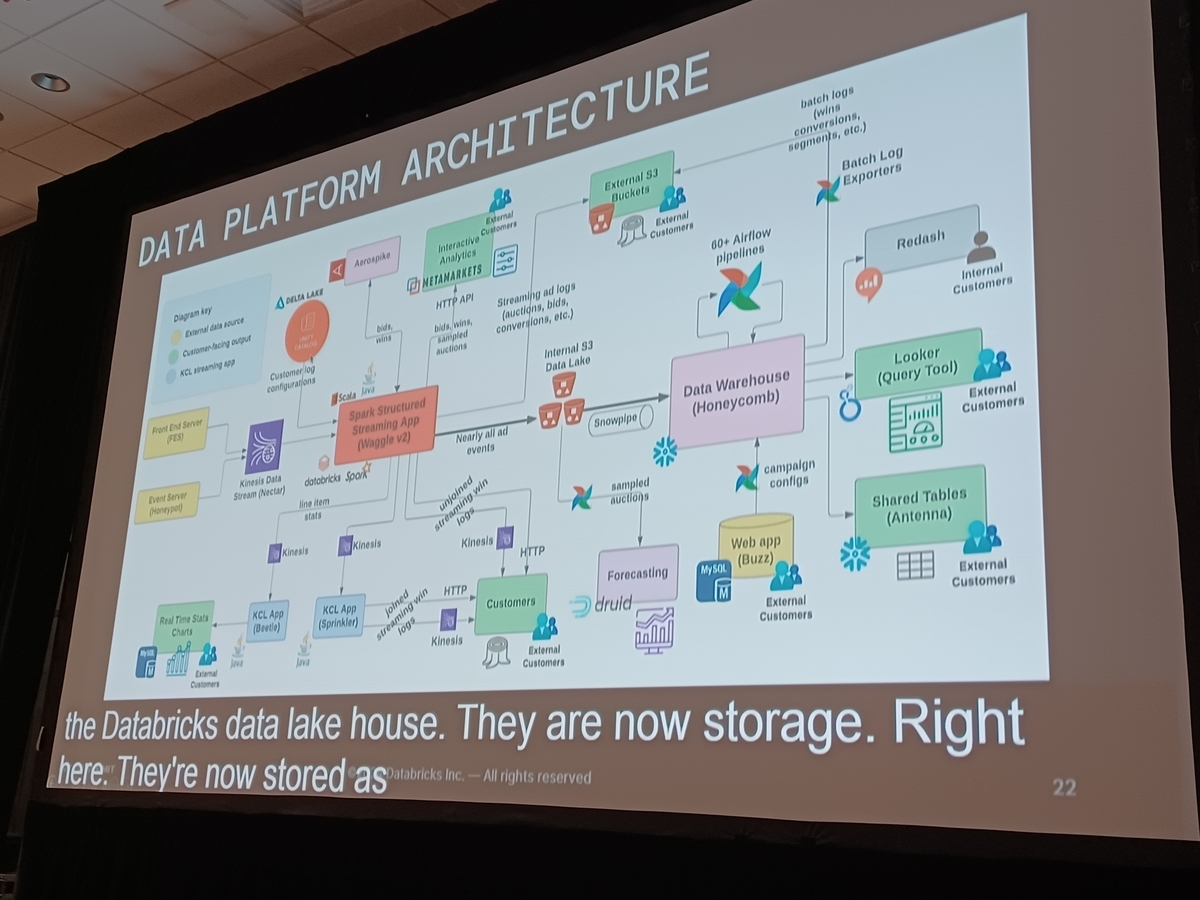

The session highlighted that Freewheel processes hundreds of billions of advertising events daily, at a throughput exceeding 5 GB/s. The presenters detailed how this data is transformed, merged, and routed to various destinations to enable real-time analytics and batch reporting, showing how Databricks can handle and optimize data operations at this scale.
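To put these figures in perspective, here is a rough back-of-envelope calculation. It assumes that 5 GB/s means decimal gigabytes and is sustained for a full day (it may well be a peak figure), and it takes 100 billion events per day as an assumed lower bound for "hundreds of billions". Neither assumption comes from the session itself.

```python
# Back-of-envelope figures only; both inputs below are assumptions.
SECONDS_PER_DAY = 24 * 60 * 60


def bytes_per_day(bytes_per_second: float) -> float:
    """Total bytes moved in a day at a constant rate."""
    return bytes_per_second * SECONDS_PER_DAY


def events_per_second(events_per_day: float) -> float:
    """Average event rate for a given daily event count."""
    return events_per_day / SECONDS_PER_DAY


throughput = 5e9      # 5 GB/s, assumed sustained, decimal gigabytes
daily_events = 100e9  # assumed lower bound for "hundreds of billions"

daily_terabytes = bytes_per_day(throughput) / 1e12
avg_events_per_second = events_per_second(daily_events)

print(f"{daily_terabytes:.0f} TB/day, {avg_events_per_second:,.0f} events/s")
```

Under these assumptions the pipeline would move on the order of hundreds of terabytes per day and average more than a million events per second.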

Sharif and Donghui also outlined the technical challenges they faced and how Databricks helped them overcome those challenges to improve system efficiency.

Overall, this introduction laid the foundation for the specific migration and optimization techniques covered in the rest of the session.

- Introduction and Overview

- Migrating and Optimizing Large-Scale Streaming Applications - Methods Using Databricks

- About the special site during DAIS

Migrating and Optimizing Large-Scale Streaming Applications - Methods Using Databricks

The BizWax Data Platform and Real-Time Bidding

Real-time bidding demands both speed and accuracy, and those demands shape how the underlying data must be handled. This talk detailed where the BizWax data platform fits into that process.

Detailed Process Flow:

- Ad Request Initiation: Publishers use Supply Side Platforms (SSPs) to send ad requests to ad exchanges.

- Auction Commencement: Following the ad request, the ad exchange begins the auction process by sending bid requests to numerous Demand Side Platforms (DSPs).

- Receiving Bid Responses: DSPs representing advertisers send their bids back to the ad exchange.

- Winner Selection: After bidding closes, the ad exchange selects the highest, most appropriate bid.

- Ad Delivery: The confirmed bid results in the winner's ad being delivered to the user's device, creating an ad impression in the process.

Within this sequence, the BizWax data platform functions as a DSP, helping to make bidding and ad delivery smoother and more efficient.
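The winner-selection step in the flow above can be illustrated with a minimal sketch. It assumes a simple highest-price rule with an optional floor price; the session did not describe the exchange's actual auction rules, and the `Bid` type, its fields, and `select_winner` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Bid:
    """A bid response from one DSP (hypothetical shape)."""
    dsp: str
    price_cpm: float
    creative_id: str


def select_winner(bids: List[Bid], floor_cpm: float = 0.0) -> Optional[Bid]:
    """Return the highest bid at or above the floor, or None if no bid qualifies.

    Assumption: a plain highest-price rule. Real exchanges may apply
    other eligibility checks and pricing rules.
    """
    eligible = [bid for bid in bids if bid.price_cpm >= floor_cpm]
    if not eligible:
        return None
    return max(eligible, key=lambda bid: bid.price_cpm)


bids = [
    Bid(dsp="dsp-a", price_cpm=2.10, creative_id="cr-1"),
    Bid(dsp="dsp-b", price_cpm=3.45, creative_id="cr-2"),
    Bid(dsp="dsp-c", price_cpm=0.80, creative_id="cr-3"),
]
winner = select_winner(bids, floor_cpm=1.00)
```

In this example `winner` is the bid from `dsp-b`, and the bid from `dsp-c` is excluded because it falls below the floor.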

The discussion reviewed the features of the BizWax data platform that make real-time ad placement more efficient and improve the revenue those placements generate. The session covered not only the theory but also real-world experience of running the platform on high-speed data.

Conclusion: Leveraging the technical sophistication of the BizWax data platform enhances the real-time bidding process. This session provided valuable insights and demonstrated the necessity of integrating robust data platforms to efficiently manage and optimize large-scale advertising events in the streaming ecosystem.

The session "Migrating and Optimizing Large-Scale Streaming Applications Using Databricks" explored applications that manage billions of advertising events daily. In particular, the "Waggle Architecture and AWS Integration" section explained how Waggle works and how it integrates with Amazon Web Services (AWS).

Named after the dance honeybees perform to communicate vital information, Waggle lies at the core of Freewheel's application, processing vast data streams and playing a crucial role in the system. Waggle consists of over 30,000 lines of code and performs several critical functions:

- Data Loading: Reads streaming advertising events from the Nectar Kinesis stream.

- Data Parsing and Transformation: Decodes and analyzes incoming data, applying necessary filtering and transformation.

- Anonymization and Merging: Anonymizes data to protect user privacy and performs merges with related data.

- Data Routing: Routes the transformed data to various destinations, including S3, Kinesis, and HTTP endpoints.

Integration with AWS allows Waggle to execute these processes quickly and at scale, providing a robust backbone supporting everything from real-time analytics to batch reporting.
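The four functions listed above can be pictured as a small pipeline. The sketch below is not Waggle's code: the JSON event format, the field names, the salted SHA-256 hashing (strictly a form of pseudonymization), the `ROUTES` table, and the in-memory lists standing in for S3, Kinesis, and HTTP destinations are all assumptions made for illustration.

```python
import hashlib
import json
from typing import Dict, Iterable, List, Optional

# Hypothetical routing table: event type -> destination names.
ROUTES: Dict[str, List[str]] = {
    "impression": ["s3", "kinesis"],
    "click": ["s3", "http"],
}


def parse(raw: bytes) -> Optional[dict]:
    """Decode one record; drop it if it is not a JSON object with an event_type."""
    try:
        event = json.loads(raw)
    except ValueError:  # covers invalid JSON and invalid UTF-8
        return None
    if isinstance(event, dict) and "event_type" in event:
        return event
    return None


def anonymize(event: dict, salt: bytes) -> dict:
    """Replace the raw user_id with a salted hash (assumed technique)."""
    out = dict(event)
    user_id = out.pop("user_id", None)
    if user_id is not None:
        out["user_hash"] = hashlib.sha256(salt + str(user_id).encode()).hexdigest()
    return out


def merge(event: dict, campaigns: Dict[str, dict]) -> dict:
    """Enrich the event with campaign metadata, if any is known."""
    return {**event, **campaigns.get(event.get("campaign_id"), {})}


def process(raw_records: Iterable[bytes], campaigns: Dict[str, dict],
            salt: bytes) -> Dict[str, List[dict]]:
    """Load, parse, anonymize, merge, and route records to stand-in sinks."""
    sinks: Dict[str, List[dict]] = {"s3": [], "kinesis": [], "http": []}
    for raw in raw_records:
        event = parse(raw)
        if event is None:
            continue  # filtered out
        event = merge(anonymize(event, salt), campaigns)
        for destination in ROUTES.get(event["event_type"], []):
            sinks[destination].append(event)
    return sinks


records = [
    b'{"event_type": "impression", "user_id": "u1", "campaign_id": "c1"}',
    b"not json",
    b'{"event_type": "click", "user_id": "u2"}',
]
sinks = process(records, {"c1": {"advertiser": "acme"}}, salt=b"demo-salt")
```

Here the malformed record is dropped, the impression lands in the `s3` and `kinesis` sinks, and the click lands in `s3` and `http`, with `user_id` replaced by `user_hash` in every routed event.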

This section was rich in technical details, providing valuable insights for those considering optimization of large-scale applications using AWS. Stay tuned for the next section, where we delve deeper into specific optimization techniques!

The session covered in detail how to migrate large-scale streaming applications to Databricks and optimize their performance. A significant change was the move from the Java Kinesis Client Library (KCL) in the original "Waggle" application to Spark Structured Streaming in "Waggle V2". The migration also meant moving from a partially externally managed EC2 cluster to Databricks job compute clusters, a crucial step for improving efficiency and scalability.
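As a rough idea of what reading a Kinesis stream with Spark Structured Streaming looks like on Databricks, here is a minimal sketch. It uses the Databricks `kinesis` source with its `streamName`, `region`, and `initialPosition` options; the helper names, the stream name `nectar`, and the region are hypothetical, and none of this is taken from Waggle V2 itself. The `spark` session is passed in, so the helpers assume they run inside a Databricks job or notebook.

```python
from typing import Dict


def kinesis_options(stream_name: str, region: str,
                    initial_position: str = "latest") -> Dict[str, str]:
    """Options for the Databricks Kinesis source (values are illustrative)."""
    return {
        "streamName": stream_name,
        "region": region,
        "initialPosition": initial_position,
    }


def read_events(spark, stream_name: str, region: str):
    """Build a streaming DataFrame over a Kinesis stream.

    `spark` is an existing SparkSession on Databricks. The raw payload
    arrives in the binary `data` column, so it is cast to a string here.
    """
    return (
        spark.readStream
        .format("kinesis")
        .options(**kinesis_options(stream_name, region))
        .load()
        .selectExpr(
            "CAST(data AS STRING) AS payload",
            "approximateArrivalTimestamp",
        )
    )
```

On Databricks this could be called as `read_events(spark, "nectar", "us-east-1")`, with parsing, merging, and routing applied as further DataFrame transformations before a `writeStream` sink with a checkpoint location.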

"Waggle V2" now runs on a classic Databricks setup inside Freewheel's own AWS account. The team is also assessing whether a future move to Databricks Serverless would suit the specific needs of its streaming workflows.

This transition to Databricks not only simplified operations handling billions of advertising events daily but also enhanced the capabilities in data transformation, merging, and routing. This shift resolved multiple technical challenges and significantly improved operational efficiency.

Look forward to further posts, where we will dive deeper into the strategies used to migrate streaming applications to Databricks and optimize them there.

About the special site during DAIS

This year, we have prepared a special site to report on session content and the atmosphere on the ground at DAIS! We plan to update the blog every day during DAIS, so please take a look.