Introduction

This is Johann from the Global Engineering Department of the GLB Division. I wrote this article to summarize a session, based on reports from Mr. Ichimura, who attended Data + AI Summit 2023 (DAIS).

In this article, we look at how to implement a data governance program, focusing on the presentation "Increasing Trust in Your Data: Enabling a Data Governance Program on Databricks Using Unity Catalog and ML-Driven MDM." The presentation emphasizes the importance of data governance and introduces practical examples of its implementation. This blog is divided into two parts, and this is the second. In the first part, we introduced the specific efforts Comcast is making to implement a data governance program, along with a data governance case study using the Databricks Lakehouse architecture and Unity Catalog. In this second part, we explain the design of the data hub and data lakehouse built by Comcast and introduce its efforts to improve data reliability through data matching and machine learning solutions.

Let's dive in!

Data Hub and Data Lakehouse Design

Building a Data Hub Centered on Databricks Lakehouse Architecture

As Comcast advances its digital transformation using data and analytics, it has built a data hub centered on the Databricks Lakehouse architecture. This data hub is designed with data governance in mind, with the aim of improving data reliability. The Databricks Lakehouse architecture has the following features:

- Integration of data warehouse and data lake functions

- Fast data processing and analysis capabilities

- Flexible data storage and processing options

Together, these features allow data to be managed centrally while maintaining data quality and consistency.

Data Lakehouse Design with Data Governance in Mind and Its Three Layers (Bronze, Silver, Gold)

In the data lakehouse design that considers data governance, data is managed in three layers. Each layer has the following roles:

- Bronze Layer: A layer that stores raw data as is. It serves as a backup and archive for data.

- Silver Layer: A layer that stores cleansed and processed data from the Bronze layer. It serves to improve data quality.

- Gold Layer: A layer that stores aggregated and analyzed data from the Silver layer. It provides business insights.

This layered structure allows for efficient data utilization while maintaining data quality and consistency. Additionally, to implement a data governance program, Unity Catalog and ML-Driven MDM (Master Data Management) are utilized. This enables metadata management and data quality improvement, enhancing data reliability.
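The three layers described above can be sketched in a few lines of plain Python. This is only an illustration of the Bronze, Silver, and Gold roles, not Comcast's pipeline: the sample records, the field names `customer` and `amount`, and the helper functions `to_silver` and `to_gold` are all assumptions made for this example. On Databricks, each layer would typically be a set of tables rather than in-memory lists.

```python
from collections import defaultdict

# Hypothetical raw records, stored untouched in the Bronze layer.
bronze = [
    {"customer": " Alice ", "amount": "120.50"},
    {"customer": "alice", "amount": "30"},
    {"customer": "Bob", "amount": None},  # missing value
    {"customer": "Bob", "amount": "75.25"},
]


def to_silver(rows):
    """Cleanse Bronze rows: trim and lowercase names, drop rows without an amount."""
    silver_rows = []
    for row in rows:
        if row["amount"] is None:
            continue
        silver_rows.append({
            "customer": row["customer"].strip().lower(),
            "amount": float(row["amount"]),
        })
    return silver_rows


def to_gold(rows):
    """Aggregate Silver rows into total spend per customer."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Note that the Bronze list is never modified, so the raw data remains available if the cleansing or aggregation rules change later.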

Improving Data Reliability with Data Matching and Machine Learning Solutions

We will introduce the data matching and machine learning solutions that Comcast is working on to implement a data governance program.

Determining Similarity Between Data Points Using Multiple Distance Metrics

Improving data reliability depends on accurately determining how similar two data points are. Comcast combines multiple distance and similarity measures for this purpose, which improves matching accuracy and supports the data governance program. Specifically, the following measures are used:

- Euclidean Distance

- Cosine Similarity

- Jaccard Coefficient

By combining these distance metrics, the similarity between data points can be determined more accurately.
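As a minimal sketch of the three measures listed above, the following Python uses only the standard library. The session report does not say how Comcast combines them, so `combined_similarity`, its equal default weights, and the conversion of Euclidean distance into a similarity via `1 / (1 + d)` are assumptions chosen purely for illustration.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two numeric vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def jaccard_coefficient(a, b):
    """Size of the intersection divided by size of the union of two sets."""
    set_a, set_b = set(a), set(b)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def combined_similarity(vec_a, vec_b, tokens_a, tokens_b,
                        weights=(1 / 3, 1 / 3, 1 / 3)):
    """Hypothetical weighted blend of the three measures (an assumption)."""
    # Map distance (0 = identical) onto a similarity in (0, 1].
    euclid_sim = 1.0 / (1.0 + euclidean_distance(vec_a, vec_b))
    cos_sim = cosine_similarity(vec_a, vec_b)
    jac_sim = jaccard_coefficient(tokens_a, tokens_b)
    w_e, w_c, w_j = weights
    return w_e * euclid_sim + w_c * cos_sim + w_j * jac_sim


score = combined_similarity(
    [1.0, 2.0], [1.0, 2.5],
    {"john", "smith"}, {"jon", "smith"},
)
print(round(score, 3))
```

Euclidean distance and cosine similarity operate on numeric vectors, while the Jaccard coefficient compares sets such as the tokens of a name, which is one reason a matching system might use more than one measure.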

Development of Machine Learning Solutions for Data Normalization, Cleaning, and Resolving Duplicate Data in CRM Systems

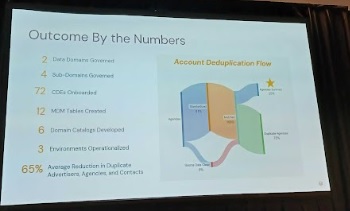

Data normalization and cleaning are essential for improving data reliability. It is also crucial to resolve duplicate data within CRM systems. Comcast is developing machine learning solutions to address these challenges. Specifically, the following methods are incorporated:

- Data Normalization: Unifying the scale and units of data makes it easier to compare and analyze data.

- Data Cleaning: Processing missing values and outliers improves data quality.

- Resolving Duplicate Data: Detecting and integrating duplicate data within CRM systems maintains data consistency.

By combining these methods, data reliability is improved, and the effectiveness of the data governance program is maximized.
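The following sketch shows how normalization, cleaning, and duplicate resolution might fit together for CRM-style records. It is a simple rule-based stand-in, not the machine learning solution described in the session: the record fields `name` and `email`, the helper names, and the 0.9 threshold are all hypothetical, and string similarity from Python's standard `difflib` module takes the place of a trained matching model. Normalization here means unifying text formatting, as a simplified analogue of unifying scale and units.

```python
from difflib import SequenceMatcher


def normalize(record):
    """Unify formatting: lowercase, collapse whitespace, map blanks to None."""
    name = " ".join((record.get("name") or "").lower().split())
    email = (record.get("email") or "").strip().lower()
    return {"name": name or None, "email": email or None}


def is_duplicate(a, b, threshold=0.9):
    """Treat records as duplicates on equal email or very similar names."""
    if a["email"] and a["email"] == b["email"]:
        return True
    if not a["name"] or not b["name"]:
        return False
    return SequenceMatcher(None, a["name"], b["name"]).ratio() >= threshold


def resolve_duplicates(records, threshold=0.9):
    """Greedily merge each record into the first matching merged record."""
    merged = []
    for record in map(normalize, records):
        for existing in merged:
            if is_duplicate(existing, record, threshold):
                # Fill missing fields in the surviving record.
                for field, value in record.items():
                    if existing[field] is None:
                        existing[field] = value
                break
        else:
            merged.append(record)
    return merged


# Hypothetical CRM records with inconsistent formatting and a missing email.
crm_records = [
    {"name": "  John  Smith ", "email": "John.Smith@Example.com"},
    {"name": "john smith", "email": "john.smith@example.com"},
    {"name": "Jon Smith", "email": None},
    {"name": "Alice Wong", "email": "alice@example.com"},
]

resolved = resolve_duplicates(crm_records)
for row in resolved:
    print(row)
```

A fixed threshold like this is easy to reason about but hard to tune, which is a common motivation for learning the matching rule from labeled pairs instead.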

Summary

Implementing a data governance program requires both a well-designed data hub and data lakehouse and effective data matching and machine learning solutions. Comcast's efforts are a useful reference when considering how to improve data reliability in your own organization. Improved data reliability, in turn, can contribute significantly to business growth.

Conclusion

This content is based on reports from members attending DAIS sessions on site. During the DAIS period, articles related to the sessions will be posted on the special site below, so please take a look.

Translated by Johann

Thank you for your continued support!