Introduction

I'm Sasaki from the Global Engineering Department of the GLB Division. This article summarizes a session at Data + AI Summit 2023 (DAIS), based on a report from Mr. Gibo, who is attending the event on site.

Articles about the DAIS sessions are collected on the special site below.

Achieving scalable machine learning by integrating the Ray framework and Hugging Face

This time, I would like to explain the session "Fine Tuning and Scaling Hugging Face with Ray AIR" in an easy-to-understand manner for Japanese readers. The talk introduced how scalable machine learning can be achieved by integrating the Ray framework, which facilitates distributed computing, with Hugging Face's advances in machine learning. The speakers were Jules Damji, Lead Developer Advocate at Anyscale, and Antoni Baum, Software Engineer at Anyscale. Addressing machine learning engineers, data scientists, and developers interested in distributed computing, they covered the need for distributed computing, Hugging Face's advances in machine learning, and an overview of the Ray framework. Let's go step by step through the approach to scalable machine learning that they introduced.

Scalable machine learning with Ray framework and Hugging Face integration

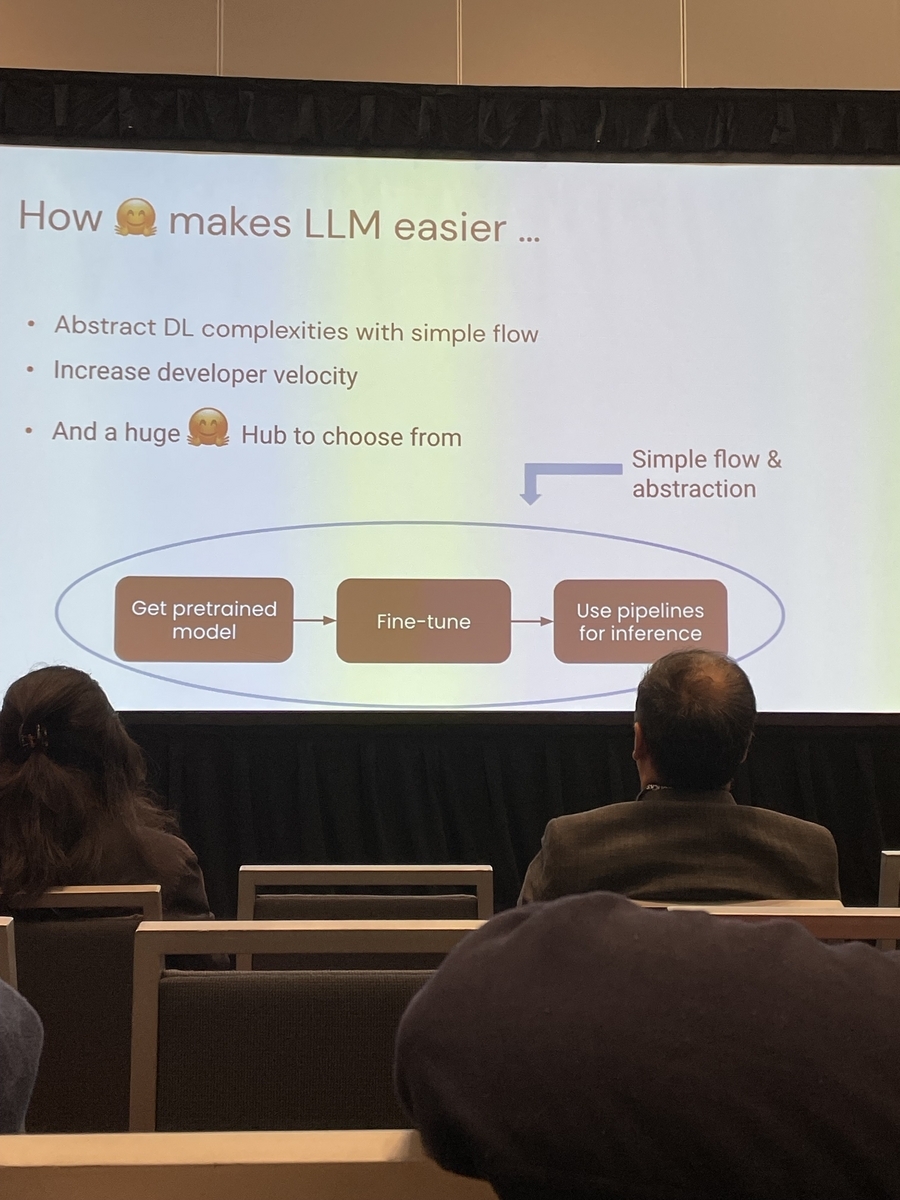

First, the speakers explained that integrating the Ray framework with Hugging Face enables scalable machine learning. Specifically, this is achieved through the following steps.

- Build a distributed computing environment using the Ray framework

- Train Hugging Face's transformer models and run inference with them in this environment

- Achieve efficient machine learning by scaling your environment up and down as needed

As you can see, the integration of the Ray framework and Hugging Face facilitates distributed computing and enables scalable machine learning.
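The steps above can be illustrated with a small library-free sketch. It does not use Ray's or Hugging Face's APIs: a thread pool stands in for the cluster, a one-parameter linear model stands in for a transformer, and the helper names (`shard`, `shard_gradient`, `train`) are hypothetical. It also assumes data parallelism, one common way to distribute training, which the session report does not spell out. Each worker computes a gradient on its own shard of the data, the gradients are averaged, and the worker count can be changed without touching the training logic.

```python
from concurrent.futures import ThreadPoolExecutor


def shard(data, num_workers):
    """Split the dataset into num_workers shards (round-robin)."""
    return [data[i::num_workers] for i in range(num_workers)]


def shard_gradient(weight, samples):
    """Return the summed squared-error gradient for y = weight * x on one
    shard, together with the number of samples in that shard."""
    grad = sum(2 * (weight * x - y) * x for x, y in samples)
    return grad, len(samples)


def train(data, num_workers, steps=200, lr=0.01):
    """Data-parallel gradient descent: workers process shards in parallel,
    then their gradients are combined into a single update."""
    weight = 0.0
    shards = shard(data, num_workers)
    # The thread pool is only a stand-in for a pool of cluster workers.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for _ in range(steps):
            results = list(pool.map(lambda s: shard_gradient(weight, s), shards))
            total_grad = sum(g for g, _ in results)
            total_n = sum(n for _, n in results)
            weight -= lr * total_grad / total_n  # averaged gradient step
    return weight


# Toy dataset generated from y = 3x, so the learned weight should approach 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]

# "Scaling" here just means changing the number of workers.
w_two = train(data, num_workers=2)
w_four = train(data, num_workers=4)
print(w_two, w_four)
```

In this sketch the result does not depend on the worker count beyond floating-point rounding, which is the property that lets an environment be scaled up or down as needed.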

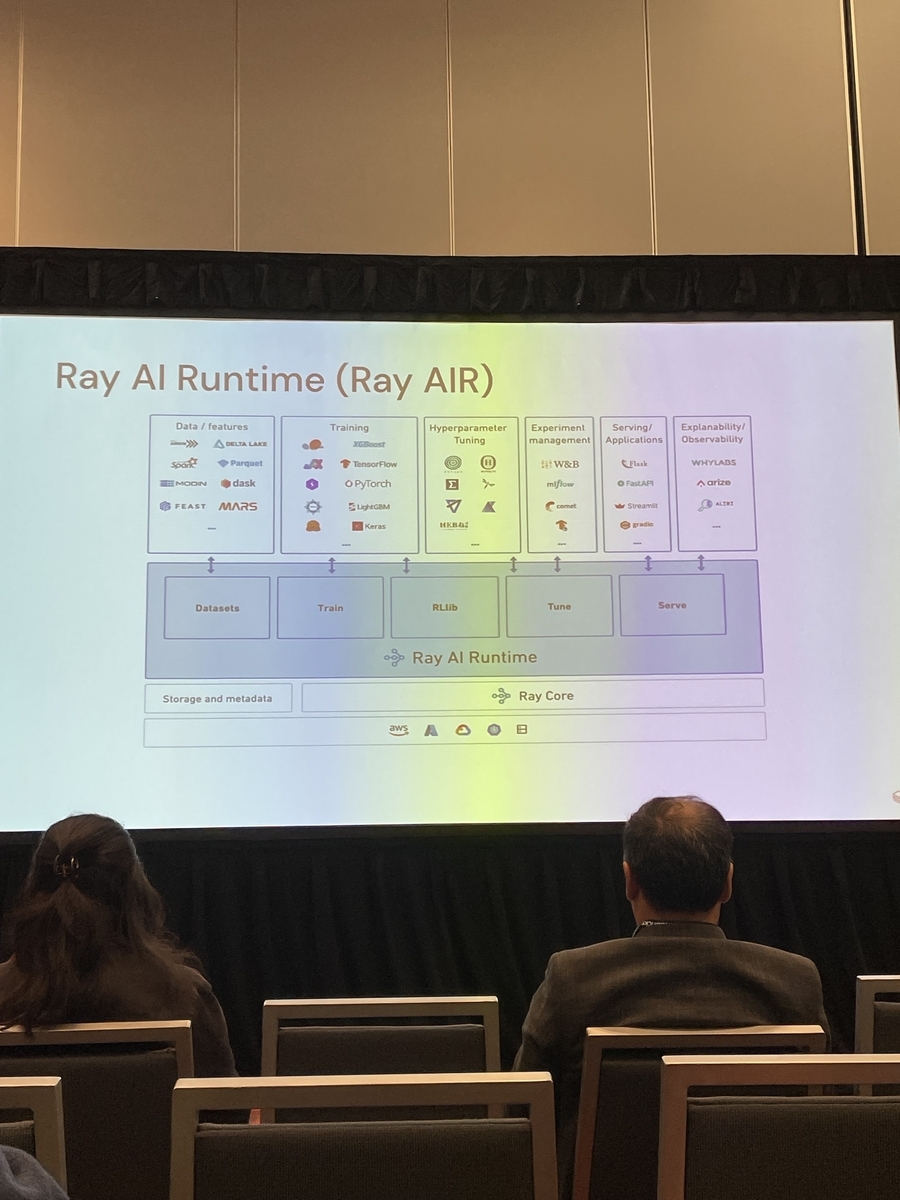

Features and Flexibility of Ray AIR

Ray AIR offers the following features and flexibility:

- Simplify distributed computing: The Ray framework makes it easy to implement distributed computing. This makes it easier to train models on multiple machines and clusters.

- Integration with Hugging Face: Hugging Face provides state-of-the-art models and datasets for machine learning tasks such as natural language processing and image recognition. Ray AIR uses these resources to support efficient machine learning development.

- Flexible settings: Ray AIR is designed so that the various settings for model training and evaluation can be customized. This lets developers choose the settings that best suit their needs.

Take advantage of the latest concepts and features

Ray AIR leverages the latest concepts and features to make machine learning development even more efficient. For example:

- Automatic hyperparameter tuning: Ray AIR can use Ray's Tune library for automatic hyperparameter optimization. This saves developers the trouble of tuning hyperparameters by hand.

- Model distillation: Ray AIR can compress large models into smaller ones using a technique called model distillation. This can improve inference speed and save resources.

By leveraging these features, Ray AIR enables scalable and efficient machine learning development. The aim going forward is to keep improving the efficiency of machine learning development while incorporating the latest technologies and concepts.
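To make the idea of automatic hyperparameter tuning concrete, here is a minimal, library-free sketch of random search, one simple tuning strategy. It is not Ray Tune's API, and everything in it is an assumption made for illustration: the search space, the helper names, and the toy `validation_loss` function, which stands in for "train a model with this configuration and measure its validation loss".

```python
import math
import random


def sample_config(rng):
    """Draw one candidate configuration from a hypothetical search space."""
    return {
        # Learning rate sampled log-uniformly between 1e-5 and 1e-1.
        "learning_rate": 10 ** rng.uniform(-5, -1),
        "batch_size": rng.choice([8, 16, 32, 64]),
    }


def validation_loss(config):
    """Toy objective standing in for a real training run. It is lowest
    near learning_rate = 1e-3 and batch_size = 32."""
    lr_term = (math.log10(config["learning_rate"]) + 3) ** 2
    batch_term = 0.1 * abs(math.log2(config["batch_size"]) - 5)
    return lr_term + batch_term


def random_search(num_trials, seed=0):
    """Evaluate num_trials random configurations and return the best
    (loss, config) pair."""
    rng = random.Random(seed)
    trials = []
    for _ in range(num_trials):
        config = sample_config(rng)
        trials.append((validation_loss(config), config))
    return min(trials, key=lambda trial: trial[0])


best_loss, best_config = random_search(num_trials=50)
print(best_config, best_loss)
```

Each trial is independent of the others, which is why this kind of search parallelizes well across the workers of a distributed framework.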

Summary

This talk introduced how to achieve scalable machine learning by integrating the Ray framework and Hugging Face. Ray AIR's power, flexibility, and use of the latest concepts and features streamline machine learning development and make it scalable. Advances like these are expected to drive further development in the field of machine learning.

Conclusion

This content is based on reports from members participating in DAIS sessions on site. During DAIS, articles related to the sessions will be posted on the special site below, so please take a look.

Translated by Johann

Thank you for your continued support!