Introduction

I'm Sasaki from the Global Engineering Department of the GLB Division. I wrote this article summarizing the session based on a report from Mr. Nagae, who is attending Data + AI Summit 2023 (DAIS) on site.

Articles about DAIS sessions are collected on the special site below.

How Australia's ARTC uses data and AI to improve operational efficiency and safety

In this session, ARTC (Australian Rail Track Corporation), Australia's national railway, gave a talk titled "Event Driven Real-Time Supply Chain Ecosystem Powered by Lakehouse," introducing how it uses data and AI to improve operational efficiency, safety, and customer understanding. The speakers were Deepak Sekar, a technical reporter specializing in Data & AI, and Harsh Mishra, Lead Enterprise Architect at ARTC. The session should interest engineers working with data and AI, business people in the logistics and railway industries, and managers pursuing data-driven business strategies. Let's take a quick look at how ARTC leverages data and AI.

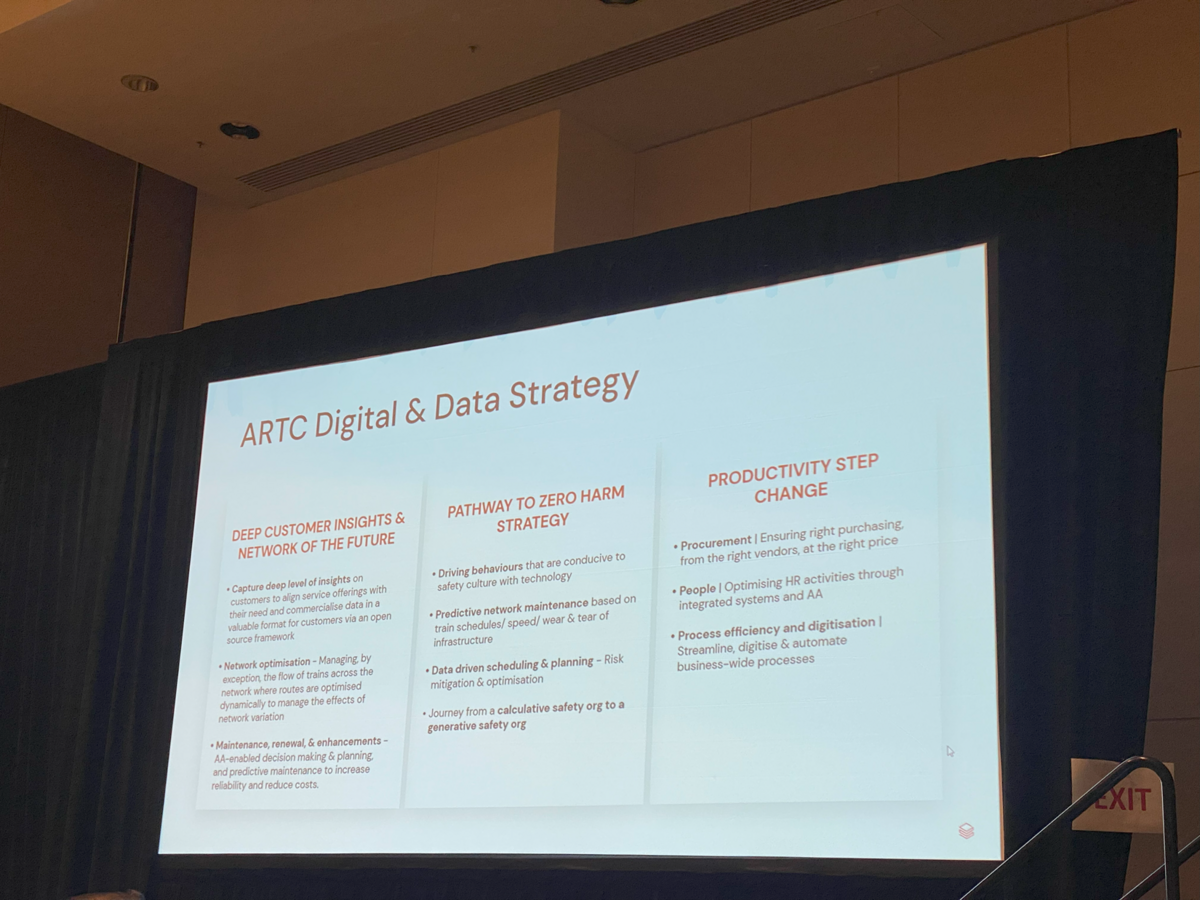

ARTC's Data Strategy

ARTC manages an extensive rail network in Australia, spanning 8,500 kilometers. It also employs more than 1,800 people at 39 sites across five states.

ARTC aims to harness data and AI to improve operational efficiency, safety, and customer understanding. To that end, it is pursuing the following initiatives:

- Building an end-to-end data layer

- Implementing a modern event-driven architecture

- Building a real-time supply chain ecosystem

Through these efforts, ARTC is using data effectively to improve operational efficiency and safety.

Real-time Supply Chain Ecosystem Powered by Lakehouse

The talk also introduced a real-time supply chain ecosystem built on an architecture called Lakehouse. Lakehouse is a data architecture that combines the capabilities of data warehouses and data lakes, and, as presented, it has the following characteristics:

- Combines the performance of a data warehouse with the flexibility of a data lake

- Processes and analyzes data in real time through an event-driven architecture

- Enables efficiency and optimization across the entire supply chain ecosystem

Leveraging Lakehouse, ARTC processes and analyzes data in real time to drive efficiency and optimization across the supply chain ecosystem.
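To make "processing data in real time" more concrete, here is a minimal sketch of incremental aggregation: each event updates a running count for its time window as it arrives, instead of recomputing everything in a nightly batch. The `TrainEvent` fields, the corridor names, the 15-minute window, and the `TumblingWindowCounter` class are all hypothetical illustrations, not ARTC's pipeline; in a lakehouse this role would typically be played by a streaming engine such as Spark Structured Streaming.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainEvent:
    # Hypothetical event: a train passing a point on a named corridor.
    corridor: str
    ts: int  # event time, seconds since epoch


class TumblingWindowCounter:
    """Incrementally counts events per (corridor, window) as they arrive."""

    def __init__(self, window_seconds: int = 900) -> None:
        self.window_seconds = window_seconds
        self.counts: dict[tuple[str, int], int] = defaultdict(int)

    def window_start(self, ts: int) -> int:
        # Align the event time to the start of its fixed-size window.
        return ts - (ts % self.window_seconds)

    def on_event(self, event: TrainEvent) -> int:
        # Update only the affected window and return its new running count.
        key = (event.corridor, self.window_start(event.ts))
        self.counts[key] += 1
        return self.counts[key]


if __name__ == "__main__":
    counter = TumblingWindowCounter(window_seconds=900)
    stream = [
        TrainEvent("corridor-A", 0),
        TrainEvent("corridor-A", 600),
        TrainEvent("corridor-A", 1000),  # falls into the next 15-minute window
        TrainEvent("corridor-B", 100),
    ]
    for e in stream:
        counter.on_event(e)
    print(dict(counter.counts))
    # {('corridor-A', 0): 2, ('corridor-A', 900): 1, ('corridor-B', 0): 1}
```

Because each event touches only one window's counter, the work per event stays constant as history grows. A production engine would also have to handle late-arriving events (for example with watermarks), which this sketch ignores.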

Identifying areas for change with a technology capability model

ARTC has developed a technology capability model to identify areas that need change, which will help guide future architectural decisions. Specifically, it is taking the following measures:

- Creating data storage, processing, and orchestration layers

- Implementing a decoupled architecture that extracts data from systems of record

- Introducing a real-time weighbridge capture system
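The decoupling idea can be illustrated with a small in-process publish/subscribe sketch: the producing side only publishes events to a topic, and downstream consumers (here, a storage sink and an overload check on weighbridge readings) subscribe independently, so either side can change without touching the other. `EventBus`, `WeighbridgeReading`, the topic name, and the 100-tonne limit are hypothetical; a real deployment would put a durable broker such as Azure Event Hubs between producers and consumers rather than this in-memory fan-out.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class WeighbridgeReading:
    # Hypothetical payload captured when a wagon crosses a weighbridge.
    wagon_id: str
    gross_tonnes: float


Handler = Callable[[WeighbridgeReading], None]


class EventBus:
    """Minimal in-memory topic-to-subscribers fan-out (no durability)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: WeighbridgeReading) -> None:
        # The producer does not know which consumers receive the event.
        for handler in self._subscribers[topic]:
            handler(event)


OVERLOAD_LIMIT_TONNES = 100.0  # hypothetical threshold, for illustration only

stored: list[WeighbridgeReading] = []
alerts: list[str] = []


def store_reading(r: WeighbridgeReading) -> None:
    # Consumer 1: land every reading in storage (a list stands in for a table).
    stored.append(r)


def check_overload(r: WeighbridgeReading) -> None:
    # Consumer 2: flag readings above the limit, independently of storage.
    if r.gross_tonnes > OVERLOAD_LIMIT_TONNES:
        alerts.append(r.wagon_id)


bus = EventBus()
bus.subscribe("weighbridge", store_reading)
bus.subscribe("weighbridge", check_overload)

# Producer side: publish readings as they are captured.
bus.publish("weighbridge", WeighbridgeReading("W001", 92.5))
bus.publish("weighbridge", WeighbridgeReading("W002", 104.0))
print(len(stored), alerts)  # 2 ['W002']
```

Adding a third consumer, such as a data-quality check, would mean one more `subscribe` call and no change to the producer.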

Background of ARTC's Data Integration and Use of Databricks

To use data and AI to improve operational efficiency, safety, and customer understanding, ARTC needed a platform capable of batch and stream processing, real-time data aggregation, and cost-optimized storage, and one that could integrate with its existing enterprise integration systems and application serving layers. ARTC found that Databricks met all of these requirements. It ingests streaming data sources with Azure Event Hubs and Spark Structured Streaming, and brings in batch sources through Azure Data Factory.
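One way to picture streaming and batch paths feeding a single store is an idempotent upsert keyed by record ID, where the newest version of each record wins regardless of which path delivered it. This is only a sketch: the `Record` fields, the `upsert` function, and the last-writer-wins rule on `updated_at` are assumptions, not ARTC's design; on Databricks a comparable effect is usually achieved with a Delta Lake `MERGE` statement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical record shape shared by both ingestion paths.
    key: str
    value: str
    updated_at: int  # source timestamp used to pick the newest version
    source: str      # "stream" or "batch", kept only for traceability


def upsert(table: dict[str, Record], incoming: list[Record]) -> dict[str, Record]:
    """Merge incoming records into table; newer updated_at wins, ties keep existing."""
    merged = dict(table)
    for rec in incoming:
        current = merged.get(rec.key)
        if current is None or rec.updated_at > current.updated_at:
            merged[rec.key] = rec
    return merged


table: dict[str, Record] = {}
# A streaming micro-batch arrives first...
table = upsert(table, [Record("train-1", "departed", 200, "stream")])
# ...then a batch extract containing an older version and a new record.
table = upsert(table, [
    Record("train-1", "scheduled", 100, "batch"),
    Record("train-2", "scheduled", 150, "batch"),
])
print({k: v.value for k, v in table.items()})
# {'train-1': 'departed', 'train-2': 'scheduled'}
```

Keying on a stable ID and comparing timestamps makes replays harmless: re-running the same batch leaves the table unchanged, which matters when the stream and the batch job can deliver overlapping data.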

Summary

ARTC, Australia's national railway, aims to use data and AI to improve operational efficiency, safety, and customer understanding. To do so, it is implementing an end-to-end data layer and a modern event-driven architecture to build a real-time supply chain ecosystem, and it uses a data architecture called Lakehouse to make effective use of its data. ARTC also uses its technology capability model to identify areas that need change: its data storage, processing, and orchestration layers are built on a decoupled architecture, and a real-time weighbridge capture system has been introduced to improve data quality and reduce costs. Through these efforts, ARTC aims to improve operational efficiency, safety, and customer understanding.

Conclusion

This article is based on reports from members attending DAIS sessions on site. During DAIS, articles about the sessions will be posted on the special site below, so please take a look.

Translated by Johann

Thank you for your continued support!