Introduction

This is Chen from the GLB Division Lakehouse Department. Mr. Matsuzaki from our company recently explained how to connect to Fivetran from Databricks (article here). This time, I will explain how to connect from Fivetran to Databricks. This approach takes a little more time and effort, so I hope it will be helpful as another option if Mr. Matsuzaki's method doesn't work.

Table of contents

- Introduction

- Table of contents

- About the premise

- Setting flow

- Transfer data to Databricks

- Confirmation in Databricks

- Conclusion

About the premise

This article describes an alternative to the procedure explained by Mr. Matsuzaki from our company.

The following scenarios are assumed.

- Unable to create a connection from Databricks to Fivetran

- Multiple members share a workspace on Fivetran and already have a connection (Destinations) to Databricks

Please note that the procedure presented in this blog is a little complicated.

Setting flow

Work goes back and forth between Fivetran and Databricks screens. I will explain in parts 1 to 3.

Part1:Work @Fivetran

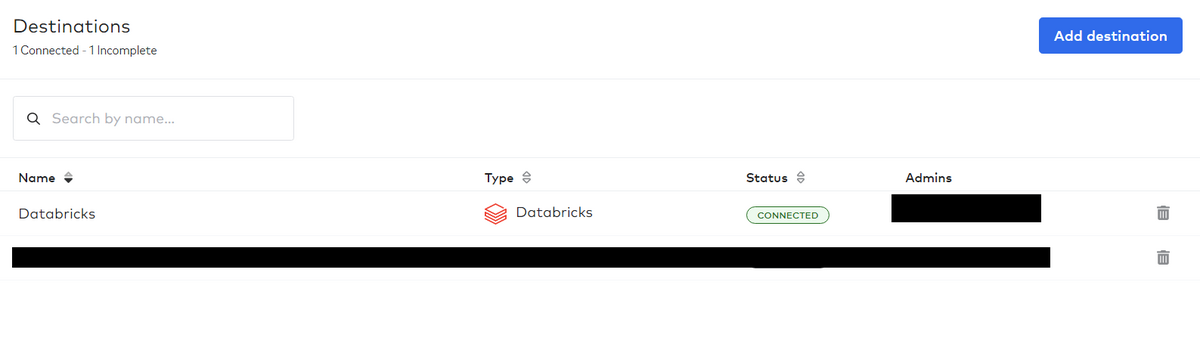

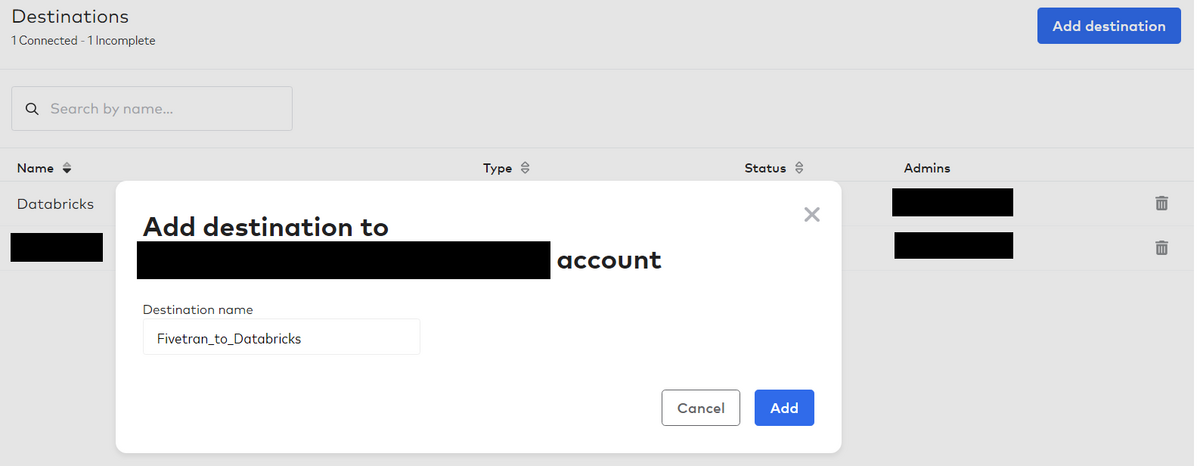

Click the "Add destination" button at the top right of the page displaying Destinations and enter a new connection (Destination Name). Here we named it "Fivetran_to_Databricks".

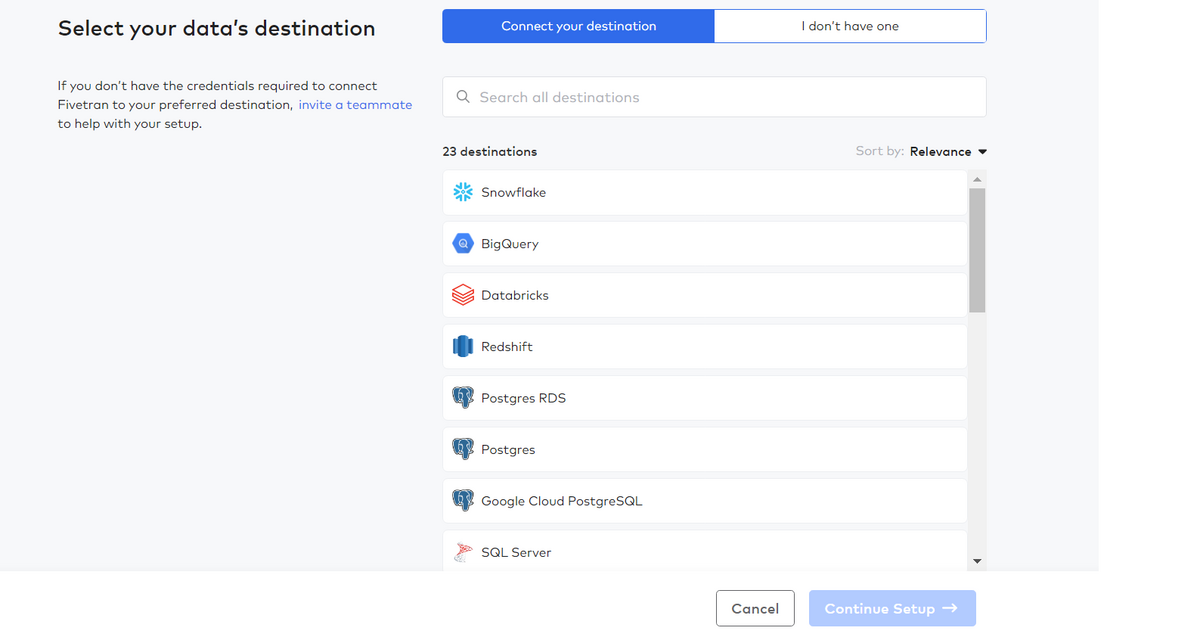

On the next screen, select "Databricks" as the Destination and click "Continue Setup".

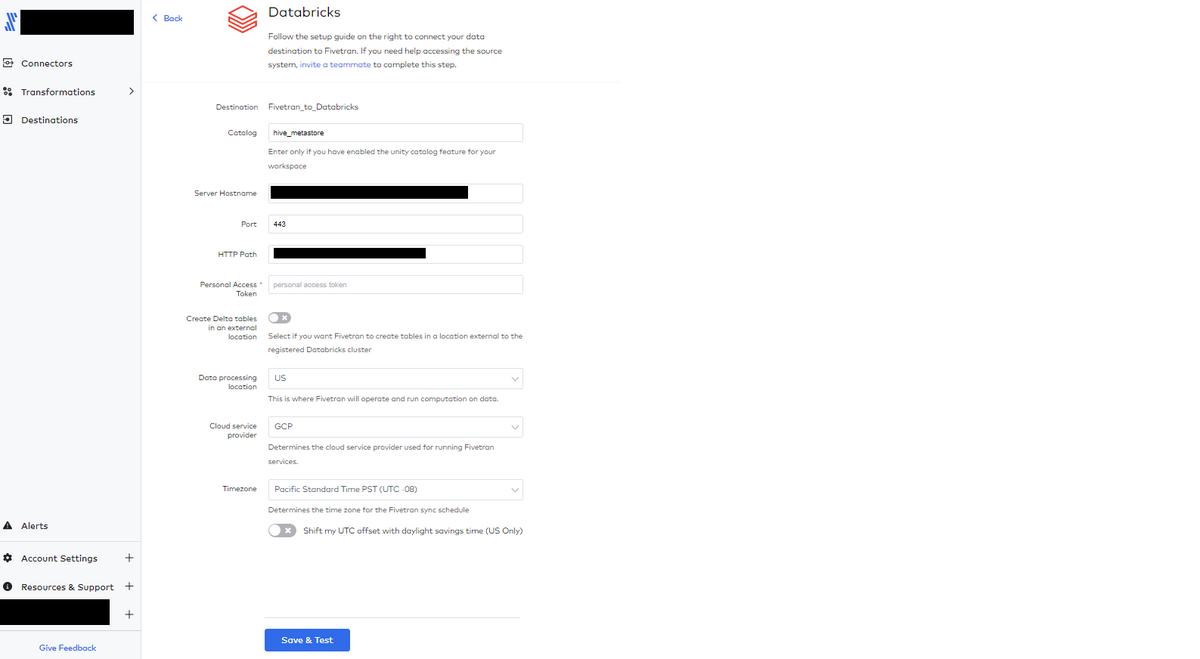

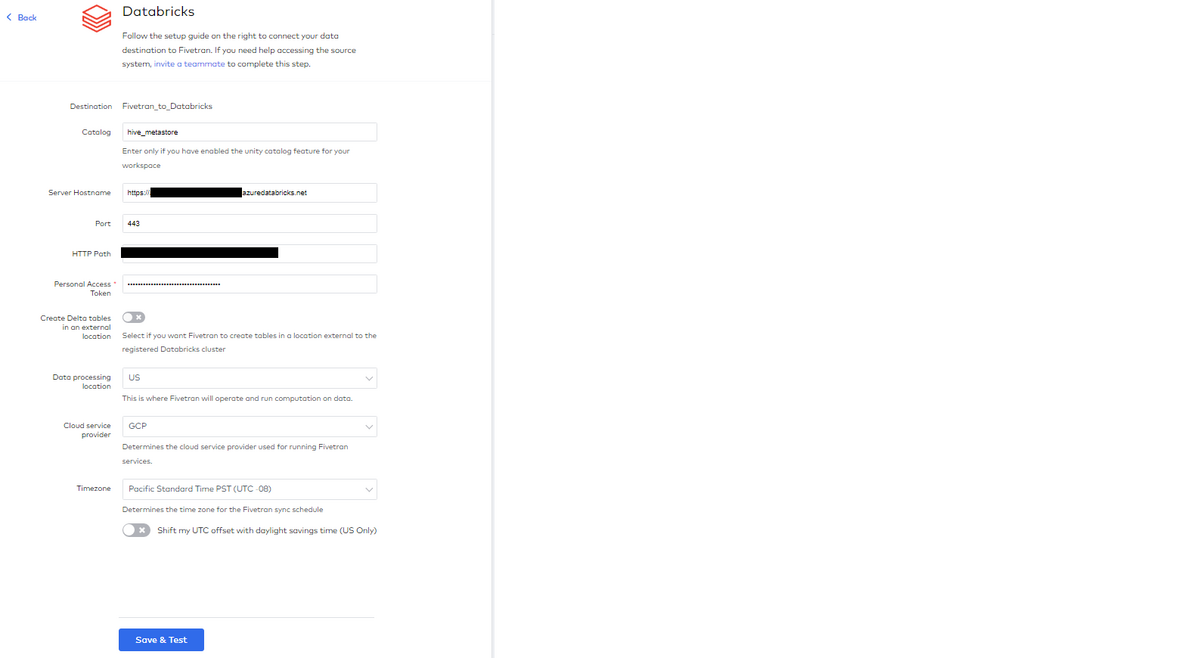

Here you need to enter the Catalog, Server Hostname, HTTP Path, and Personal Access Token from your Databricks settings. These values are obtained on the Databricks side, so keep this window open, open Databricks in another window, and move on to the next step.
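
As a rough aid for this step, the four values can be tracked in a small Python structure so it is easy to see which ones still have to be collected from Databricks. This is only a sketch: the class name `DatabricksDestinationSettings` and the method `missing_fields` are hypothetical names I made up, not part of Fivetran or Databricks, and the catalog value `hive_metastore` is used only as a placeholder.

```python
from dataclasses import dataclass, fields


@dataclass
class DatabricksDestinationSettings:
    """Hypothetical container for the four values the Fivetran form asks for."""
    catalog: str = ""
    server_hostname: str = ""
    http_path: str = ""
    personal_access_token: str = ""

    def missing_fields(self) -> list[str]:
        """Return the names of the fields that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Only the catalog is known so far (placeholder value); the rest come from Databricks.
settings = DatabricksDestinationSettings(catalog="hive_metastore")
print(settings.missing_fields())
# ['server_hostname', 'http_path', 'personal_access_token']
```

Once the list printed by `missing_fields()` is empty, all four form fields can be filled in.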

Part2:Work @Databricks

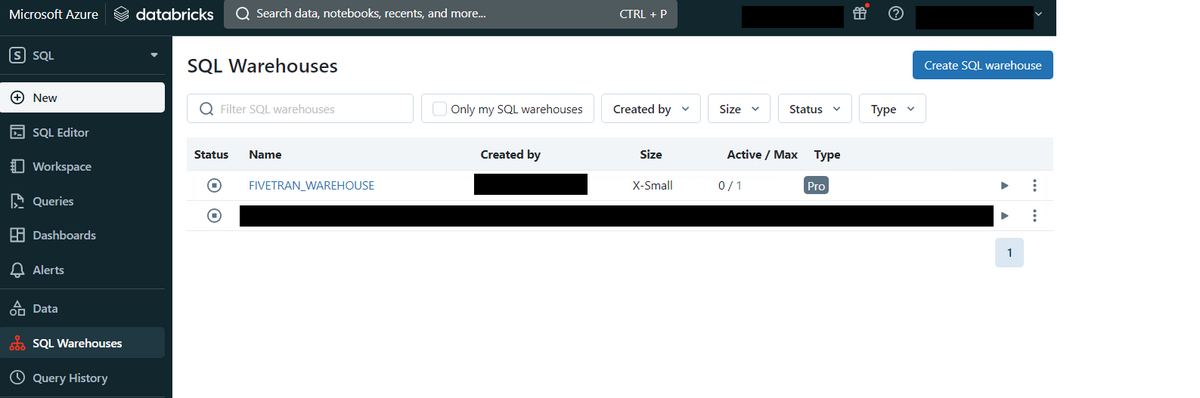

To browse the Catalog on Databricks, you need a corresponding compute node. Create one named "FIVETRAN_WAREHOUSE" under SQL Warehouse.

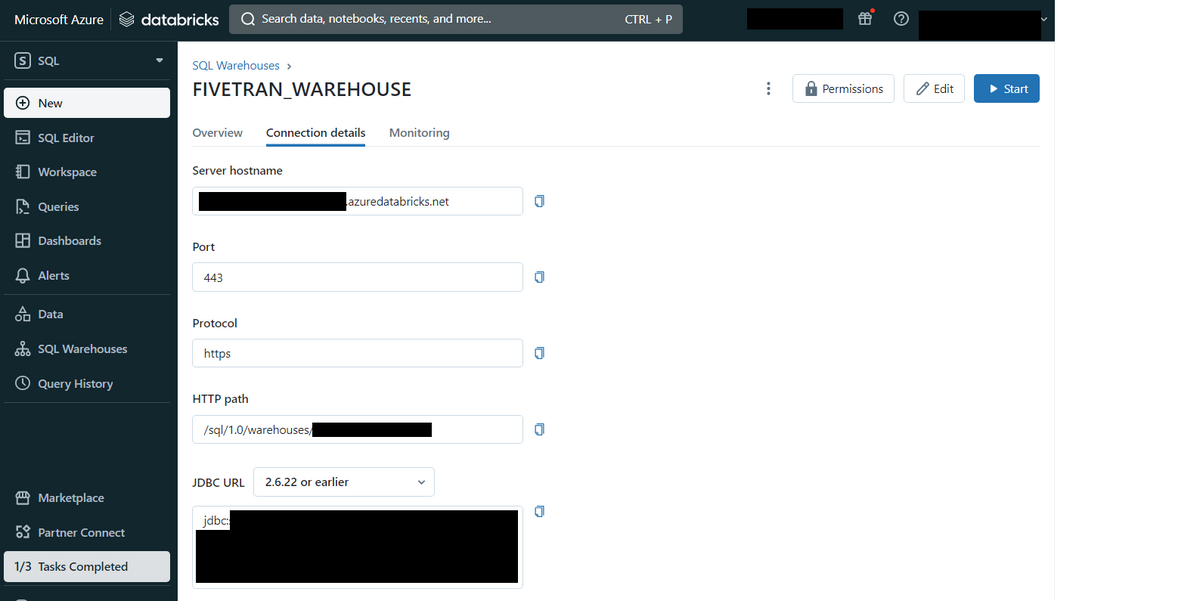

Next, click the created "FIVETRAN_WAREHOUSE" to view its "Connection details". Enter the displayed "Server hostname" and "HTTP path" values on the Fivetran side exactly as shown.
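
Copy-and-paste mistakes are easy to make when moving these two values between windows. The following is a minimal sketch of a sanity check, assuming that the server hostname is a bare hostname (no `https://`) and that neither value should carry stray whitespace. The function name `check_connection_details` and the sample values are hypothetical; real values come from your own "Connection details" tab.

```python
def check_connection_details(server_hostname: str, http_path: str) -> list[str]:
    """Return a list of obvious copy-and-paste problems (empty list = none found)."""
    problems = []
    for label, value in (("Server hostname", server_hostname), ("HTTP path", http_path)):
        if not value.strip():
            problems.append(f"{label} is empty")
        elif value != value.strip():
            problems.append(f"{label} has leading or trailing whitespace")
        if "://" in value:
            # Assumption: the form expects a bare hostname / path, not a full URL.
            problems.append(f"{label} should not contain a URL scheme")
    return problems


# Placeholder values for illustration only.
print(check_connection_details("example.cloud.databricks.com",
                               "/sql/1.0/warehouses/0123456789abcdef"))
# []
```

This check only catches formatting slips; whether the values actually work is confirmed by the "Save & Test" button on the Fivetran side.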

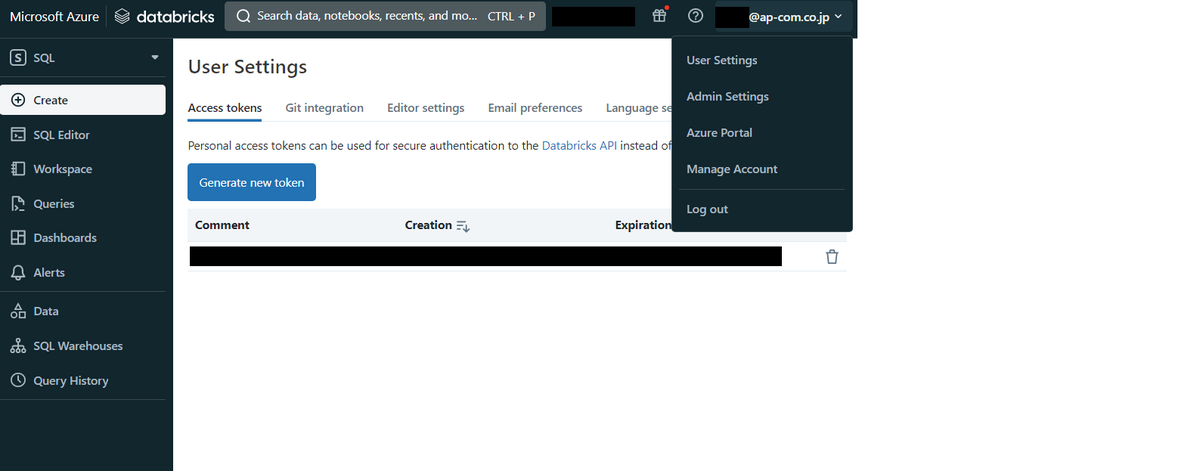

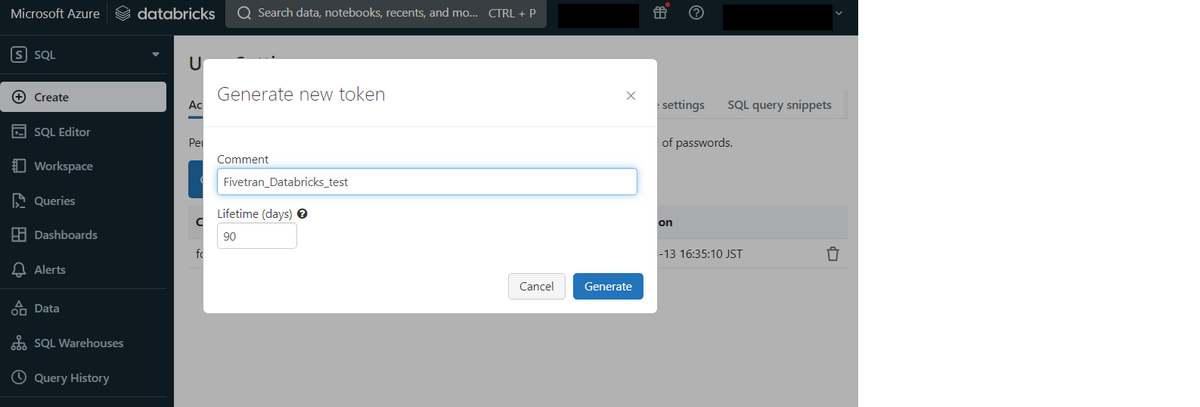

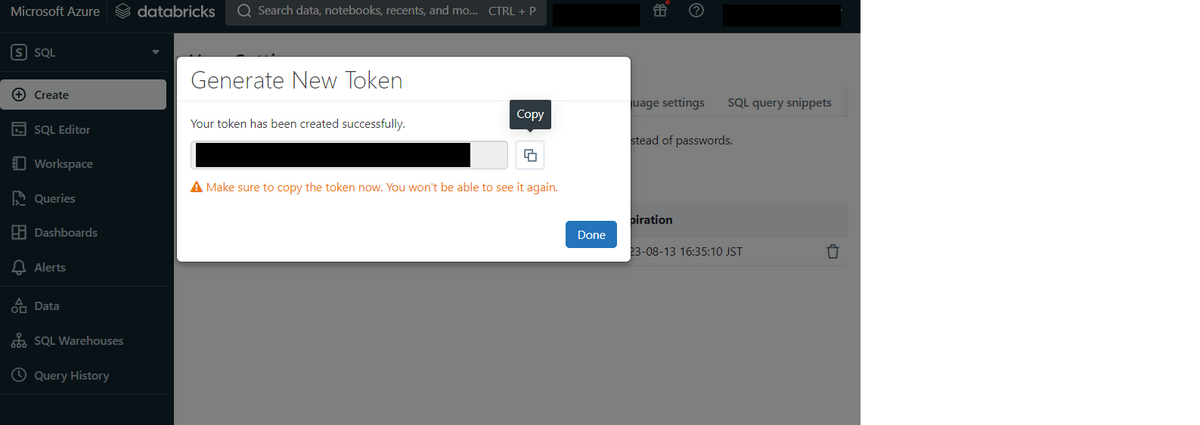

Press the "Generate new token" button to display the "Generate new token" pop-up screen. Set the comment (Comment) and the expiration date (Lifetime (days)) and press the "Generate" button.

A new Token will appear. Press the "Done" button to complete the token creation. Note that this Token will only be displayed once, so make a copy and keep it in another location.
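
Because the token has a limited lifetime, it can help to note when it will expire so the Fivetran connection does not stop working unexpectedly. Below is a small sketch that assumes the expiry is simply the creation date plus the "Lifetime (days)" value. The helper names `token_expiry` and `days_left`, the creation date, and the 90-day lifetime are all example assumptions.

```python
from datetime import date, timedelta


def token_expiry(created_on: date, lifetime_days: int) -> date:
    """Date on which a token created on `created_on` is assumed to expire."""
    if lifetime_days <= 0:
        raise ValueError("lifetime_days must be positive")
    return created_on + timedelta(days=lifetime_days)


def days_left(created_on: date, lifetime_days: int, today: date) -> int:
    """Days remaining until the assumed expiry date (negative once expired)."""
    return (token_expiry(created_on, lifetime_days) - today).days


# Example: a token created on 2023-05-16 with a 90-day lifetime.
print(token_expiry(date(2023, 5, 16), 90))
# 2023-08-14
```

When the remaining days get low, a new token has to be generated in Databricks and entered again on the Fivetran side.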

Part3:Work @Fivetran

On the Fivetran settings screen, enter the Token created in Databricks together with the Server Hostname and HTTP Path shown under "Connection details" of the "FIVETRAN_WAREHOUSE" compute node. The settings are now complete. Press the "Save & Test" button to check that the connection can be created.

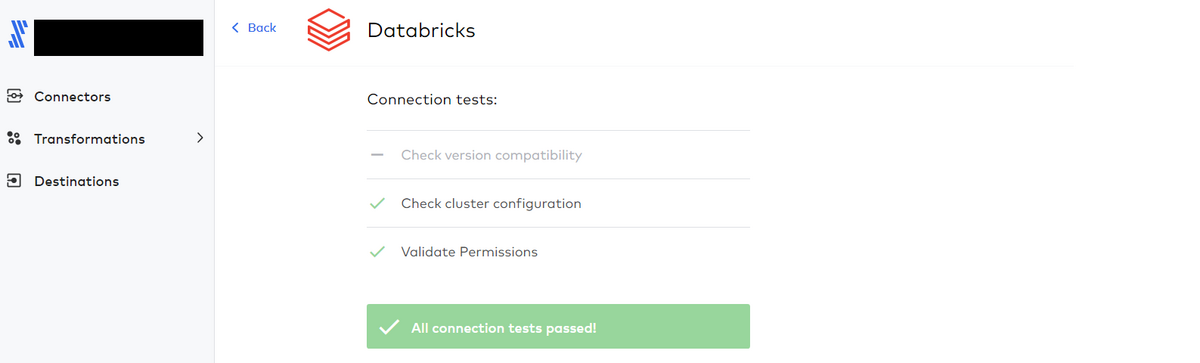

The connection was created successfully, passing the test checks.

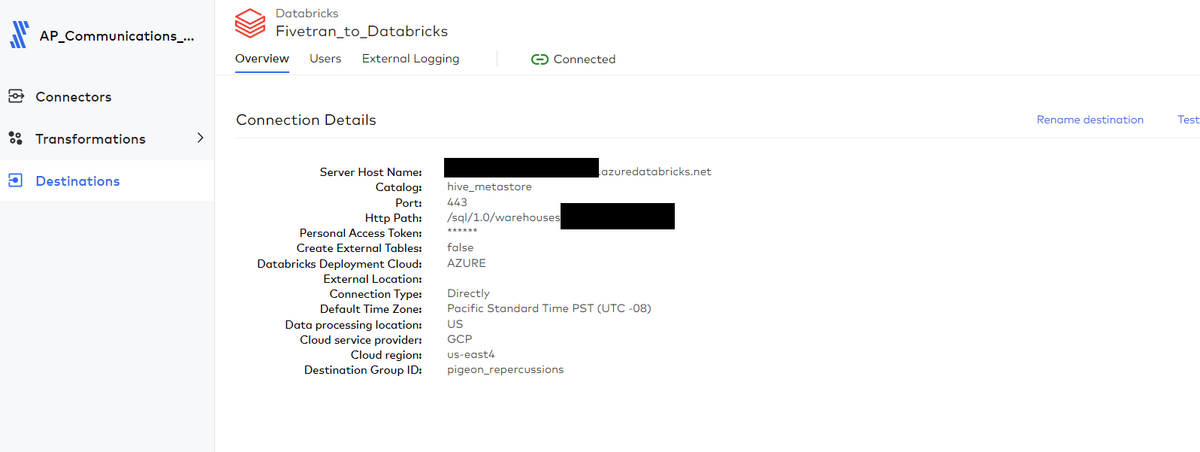

You can check the details of the created connection from Destinations.

Transfer data to Databricks

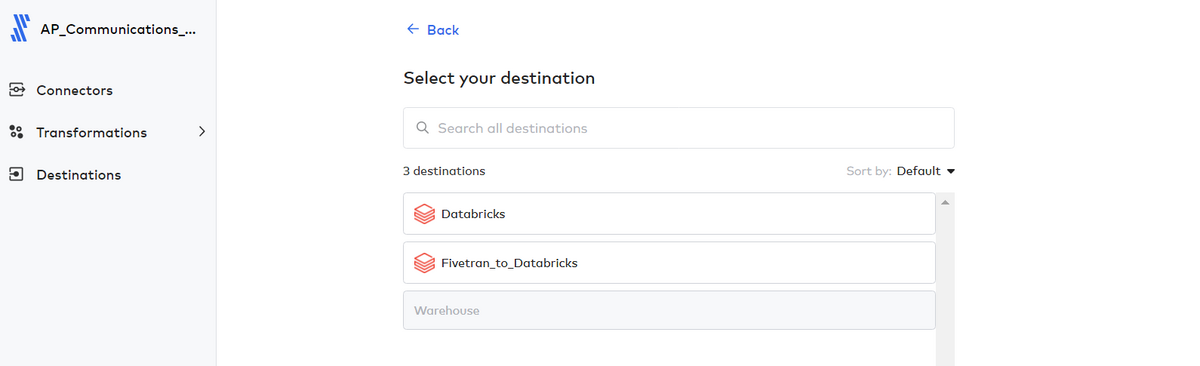

After preparing a Google Sheet for testing (reference for creation), move on to the next step. Click “Connectors” in the left sidebar, press the “Add Connector” button in the upper right, and select “Fivetran_to_Databricks” as the Destination to create a connector.

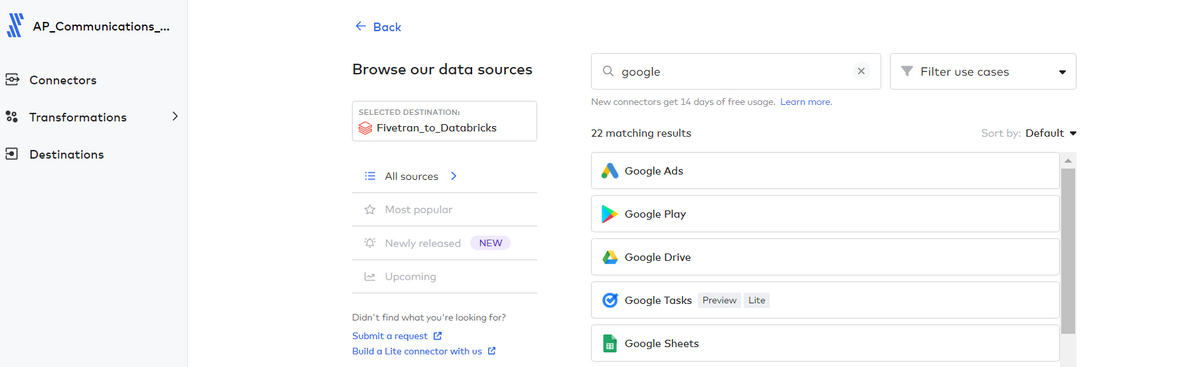

Select Google Sheets as the data source.

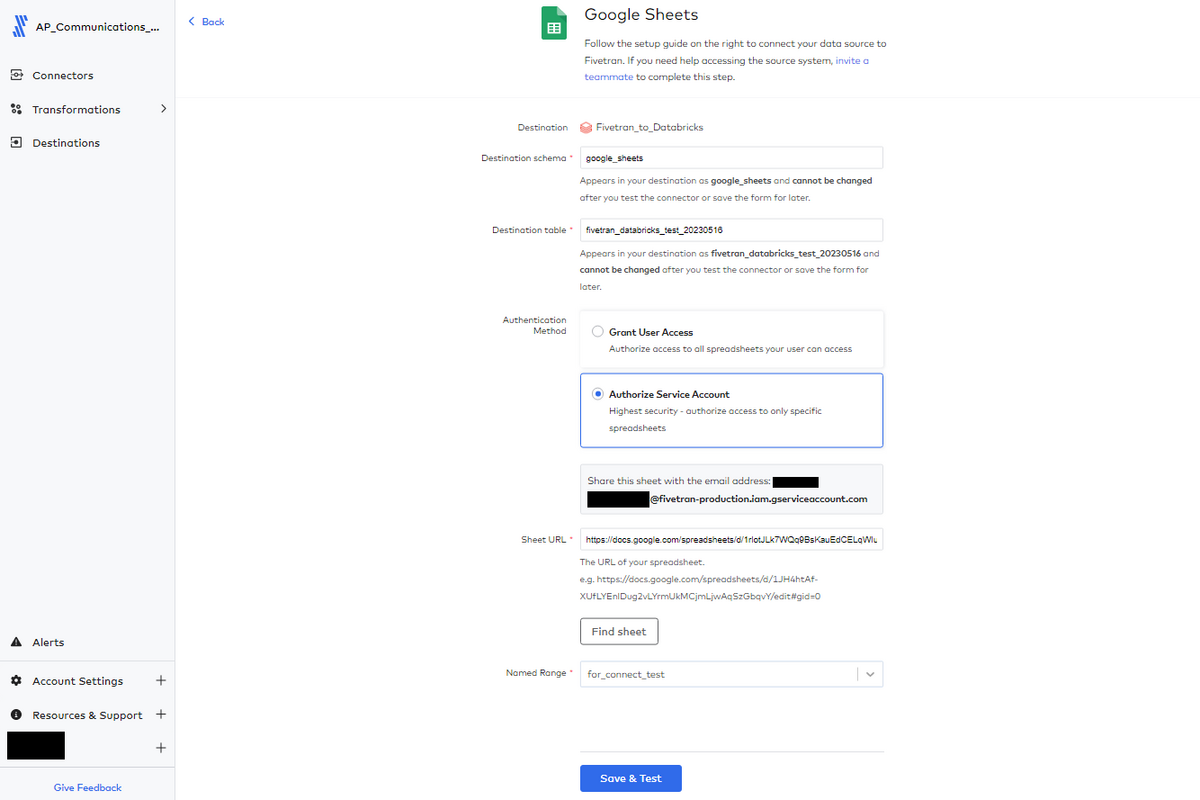

Enter information about Google Sheets and press the "Save & Test" button.

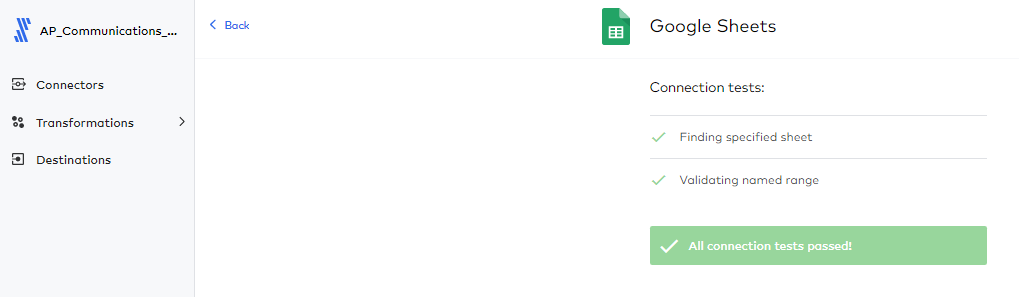

Passed the test without issue.

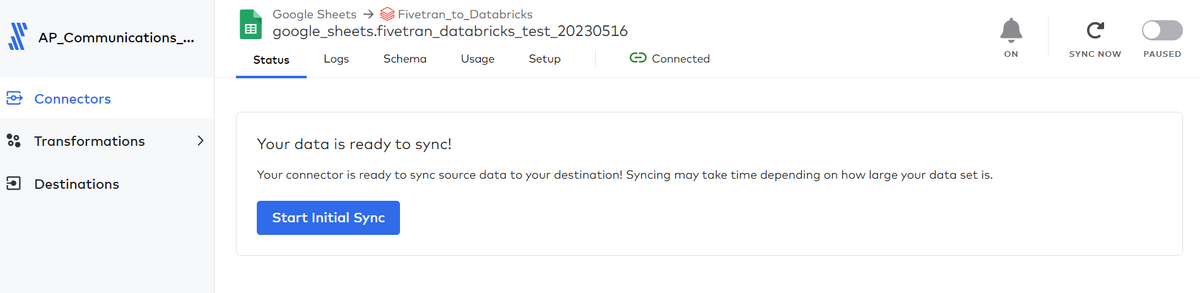

Press “Start Initial Sync” to start data transfer.

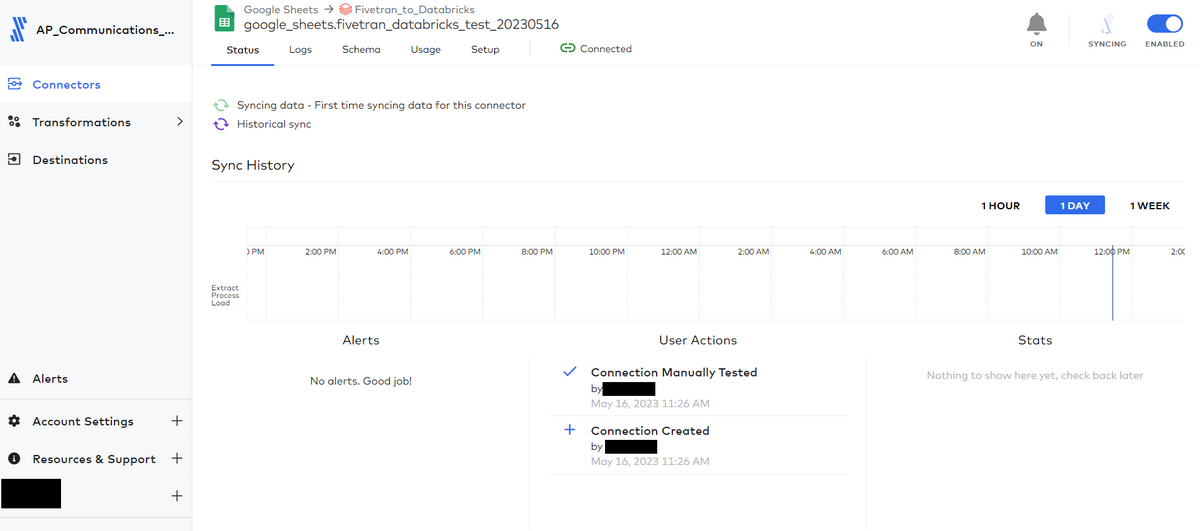

Data transfer is in progress. This may take some time, because the compute node on the Databricks side has to start up when the data is transferred.

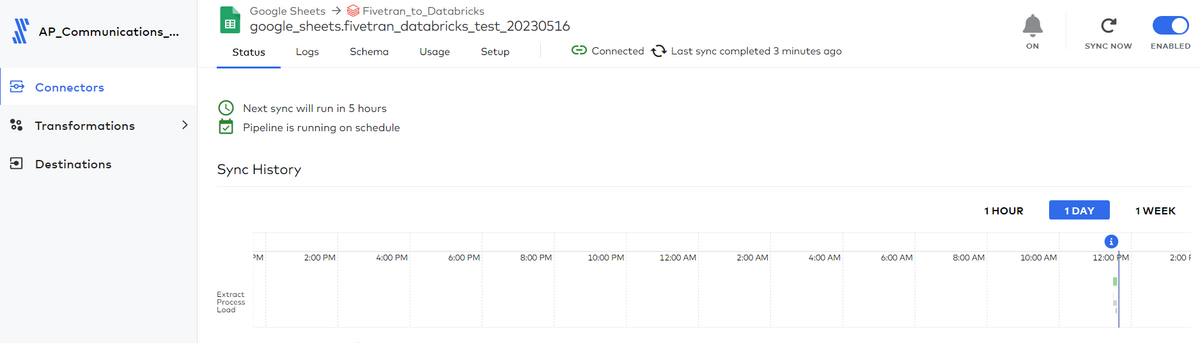

Data transfer completed.

Confirmation in Databricks

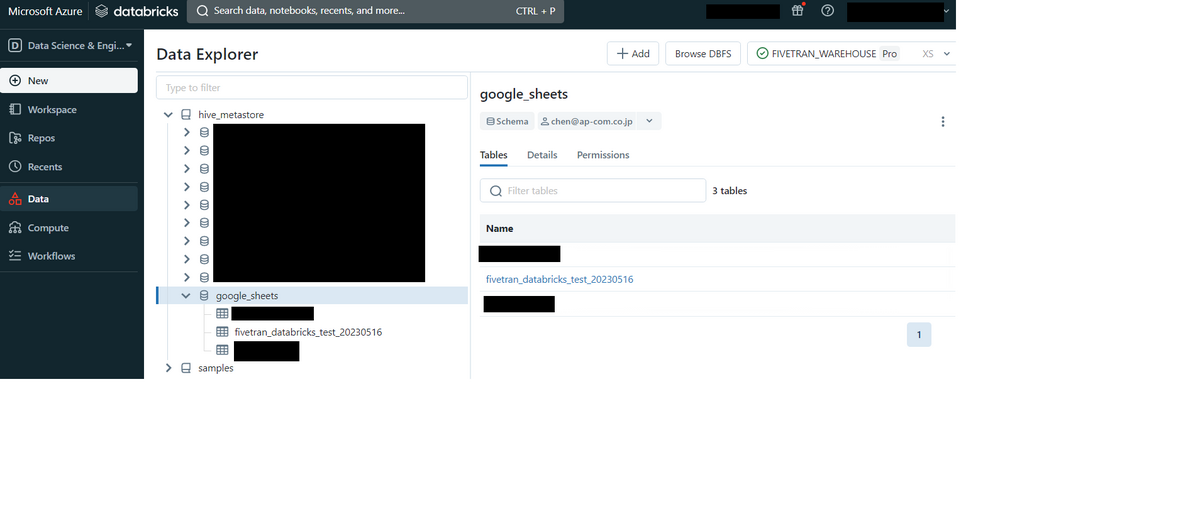

In Data Explorer, you can confirm that the data has been successfully transferred to hive_metastore/google_sheets/fivetran_databricks_test_20230516.
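
Besides looking at Data Explorer, the table contents can also be previewed with a query. The sketch below converts the catalog/schema/table path shown above into a three-level table name and builds a simple `SELECT` statement. The helper names `table_name_from_path` and `preview_query` are hypothetical, and the row limit of 10 is an arbitrary choice.

```python
def table_name_from_path(path: str) -> str:
    """Turn 'catalog/schema/table' into a backtick-quoted 'catalog.schema.table'."""
    parts = [p for p in path.strip("/").split("/") if p]
    if len(parts) != 3:
        raise ValueError(f"expected catalog/schema/table, got {path!r}")
    if any("`" in p for p in parts):
        raise ValueError("names containing backticks are not handled in this sketch")
    return ".".join(f"`{p}`" for p in parts)


def preview_query(path: str, limit: int = 10) -> str:
    """Build a query that previews the first `limit` rows of the table."""
    return f"SELECT * FROM {table_name_from_path(path)} LIMIT {int(limit)}"


print(preview_query("hive_metastore/google_sheets/fivetran_databricks_test_20230516"))
# SELECT * FROM `hive_metastore`.`google_sheets`.`fivetran_databricks_test_20230516` LIMIT 10
```

The resulting statement can be pasted into the Databricks SQL editor and run on the "FIVETRAN_WAREHOUSE" compute node to see the rows that came from the Google Sheet.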

Conclusion

That's all for this article. How was it? It takes a little time, but we hope it will be useful as another reference when the connection between Fivetran and Databricks described by Mr. Matsuzaki from our company cannot be created for some reason.

We provide a wide range of support, from the introduction of a data analysis platform using Databricks to support for in-house production. If you are interested, please contact us.

We are also looking for people to work with us! We look forward to hearing from anyone who is interested in APC.

Translated by Johann