Defining the Challenges and Current Landscape

The landscape of AI and ML technologies is ever-evolving, with new challenges continuously emerging. This session focuses on the current state of these technologies and their environmental impact. It's crucial to recognize the achievements made so far and identify ongoing issues.

Differences Between ML and AI and Their Implications

Though AI and ML often appear interchangeable, they each serve distinct meanings. AI represents a broader concept, within whose boundaries ML operates as a subset. AI aims to mimic human decision-making processes, whereas ML concentrates on learning from data and making predictions. This session will clarify how these differences impact technological choices and business strategies.

- Defining the Challenges and Current Landscape

- Addressing Challenges and Directions for Improvement

- Regional Adaptation and Community Engagement

- Section: Monitoring and Technological Integration

- Performance Evaluation and Optimization

- About the special site during DAIS

Addressing Challenges and Directions for Improvement

Defining specific challenges is imperative, and this session does not hesitate to propose specific improvement strategies. It emphasizes the need for enhancements not only within technical realms but also in operational procedures. The suggestions include streamlining methodologies for more efficient operation of large language models and increasing the reliability of unpredictable systems.

Deep understanding and confronting AI and ML challenges are crucial. Through thorough examination, this session substantially contributes to strategies aimed at transitioning from uncertainty to more deterministic approaches.

Regional Adaptation and Community Engagement

This section focuses on the development and engagement of local communities, as well as regional adaptations of emerging technologies. Key insights on Large Language Models (LLM) were notably discussed.

1. Community Development:

- Progress has been notable in both urban and rural areas, with these regions achieving in the past 18 months what previously took over a decade. This suggests an accelerating pace of growth, indicating further potential for the future.

2. Enhancing Existing Models and Creating New Ones:

- Discussions highlighted the importance of strengthening existing models over merely increasing their number. Each model varies in complexity and application, with significant enhancements expected over the next 15 years.

3. Importance of Data-Driven Decision Making and Self-Regulation:

- The importance of utilizing data-driven models and maintaining self-regulation was emphasized. These practices are crucial for making accurate decisions that continuously benefit the community in the long run.

4. Potential of Civic Democracy:

- Civic democracy may be dull at times, but it remains a beneficial model, recognizing the importance of understanding detailed complexities within broader systems.

5. Recognition of Delays and Accountability:

- Different models demonstrate unique responses, necessitating an understanding of both delays and accountability. This awareness is currently growing among organizations that are beginning to emphasize transparency and prompt responses.

Local communities have emerged as significant players in technological evolution and continue to make critical contributions to broader advancements. This trend is expected to remain a focal point moving forward.

Section: Monitoring and Technological Integration

The session titled "From Uncertainty to Certainty: Deterministic LLMOps Strategies" centered on improving the reliability of unpredictable environments involving Large Language Models (LLM). Key aspects discussed include the critical roles of technological integration and effective monitoring in managing these systems.

Venturing into new technologies requires deep practice and understanding. Various innovation hubs showcased different methods, encouraging AI and ML model providers to intensify their efforts on LLM-specific applications. We actively take steps to integrate and leverage best practices in both AI and ML domains.

A major topic of interest was "Model Outlet Adjustment". This topic attracted numerous questions regarding altering outlet designs in non-parametric mathematical systems. Understanding these complex mathematical models is essential, requiring continuous engagement and problem-solving skills akin to advancing mathematical challenges.

Mastering LLMOps involves similarly tackling complex mathematical issues, necessitating sustained learning and rigorous monitoring to ensure reliable outputs. The inherent complexity of these systems highlights the need for stringent and continuous monitoring.

The discussions emphasized integrating cutting-edge technological solutions and rigorous monitoring processes to ensure effective and reliable operation of LLMs. As technology advances, the challenges it brings can also be surmounted with solid dedication and strategic approaches.

The "Data Integration and User-Friendly Approaches" segment was highlighted in the session, emphasizing the importance of seamless data integration into LLMs, alongside designing user-friendly content, efficient data handling, and robust database management systems.

Procedures for LLM data integration include:

Extraction and Online Management: This initial stage involves collecting necessary data and organizing it to facilitate efficient online management, ensuring appropriate access to the right data at the right time.

Embedding Data into Methods: After data collection, focus shifts to embedding this textual information into specific computational methods. This integration is vital for preparing data for effective processing in subsequent stages.

Integrating User-Friendly Content: Designing content that is intuitively understandable and accessible to end-users is emphasized. This content is integrated within the model to enhance usability and accessibility.

Model Selection and Application: The final step involves selecting the most appropriate model and applying the processed data. This stage significantly impacts the practical application of the model, affecting its performance and reliability.

Following these meticulous procedures ensures organizations can guarantee more reliable operations of LLMs. Mastering data indexing, column management, and navigation protocols dramatically improves the contextual application of these AI-driven systems.

Insights acquired in this session on implementing data integration and user-friendly approaches are essential for optimizing projects involving LLMs. Adhering to data management best practices and strengthening the database framework are crucial for maintaining consistency and reliability in inherently non-deterministic systems.

Performance Evaluation and Optimization

The followings focused on managing the uncertainties brought by the non-deterministic nature of Large Language Models (LLM).

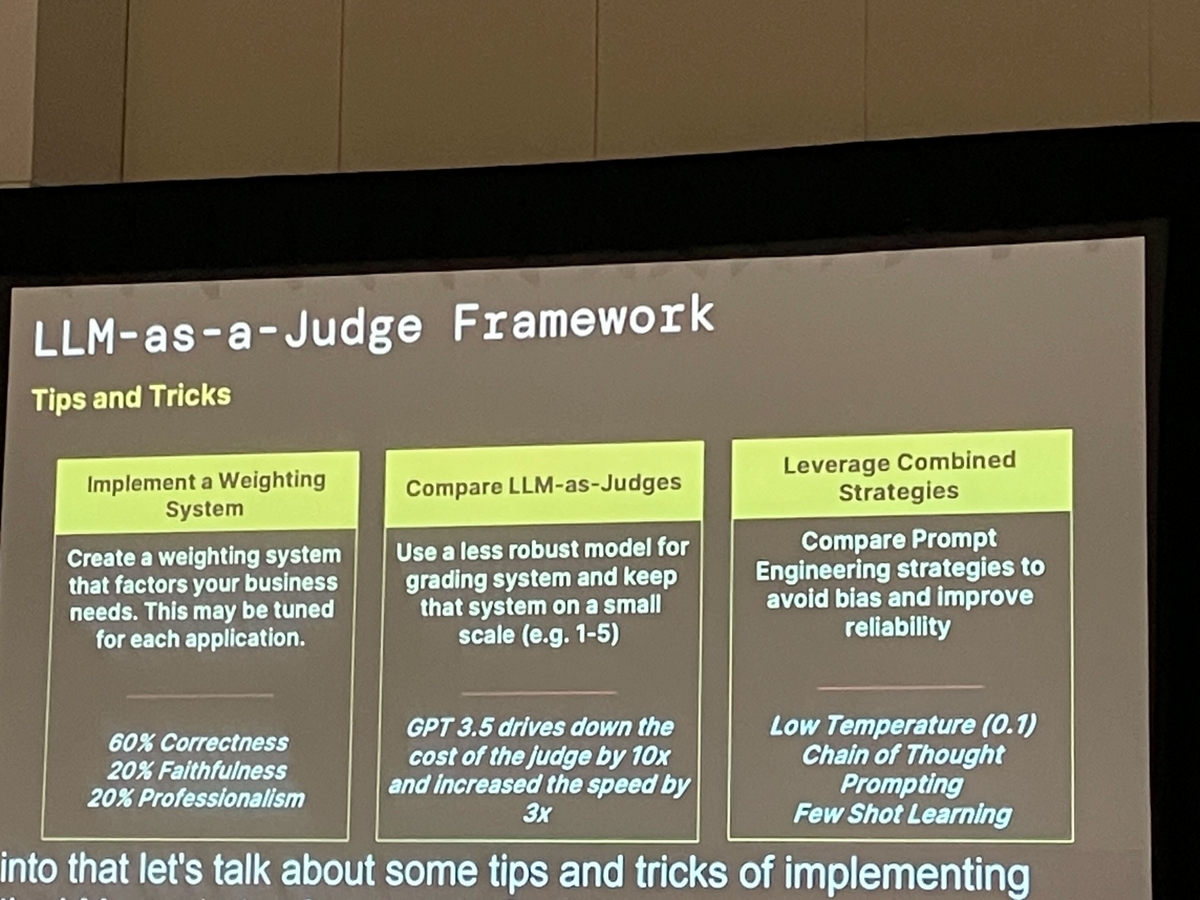

Utilizing LLM as a Proxy

Given that LLMs, by nature, do not guarantee identical outputs for the same input, special strategies are necessary to ensure reliable results for business and critical operations. This session explored how LLMs can be used as a proxy for human effort in generating data items. By leveraging the collective strengths of AI, ML, cloud technologies, and digital tools like Blackboard, LLMs can mimic human-like decision-making processes and effectively optimize data management tasks.

Understanding Context in LLM Outputs

Much of the discussion was dedicated to the importance of context in LLM outputs. For instance, if an LLM were assigned the task of managing a book collection, it would be crucial not only to properly categorize these books but also to understand the thematic and contextual elements defining them. This context-aware processing ensures that the outputs are relevant and accurate, making LLMs more effective.

Optimization Techniques and Metrics

The session focused centrally on technologies for optimizing LLM performance, which included establishing robust metrics capable of quantitatively evaluating the effectiveness and precision of LLM outputs. Metrics such as accuracy, speed, and relevance of the generated content were discussed. Using these metrics allows for fine-tuning LLMs to provide more predictable and reliable outputs, essential for deployment in sensitive or critical business scenarios.

Conclusion

The session concluded by emphasizing that transitioning from uncertainty to certainty in handling LLMs involves rigorous procedures of performance evaluation and continual optimization. The systematic approaches discussed not only support mission-critical applications but also enhance overall resilience and efficiency in business operations, effectively bridging the gap between unpredictability and reliable performance in dynamic environments where LLMs operate. This ensures that Large Language Models remain a potent and practical tool in the evolving landscape of digital and artificial intelligence technologies.

About the special site during DAIS

This year, we have prepared a special site to report on the session contents and the situation from the DAIS site! We plan to update the blog every day during DAIS, so please take a look.