Preface

While developing Terraform providers and Python SDKs, the team behind UCX accumulated valuable knowledge about building robust Python applications on Databricks.

- Preface

- The Importance of Regular Integration Testing

- The Power of Pytest Fixtures

- Debug Mode and Multi-Cloud Testing

- Static and Dynamic Pytest Fixtures

- Maintaining Test Consistency

- Configuration Management Using YAML or JSON Files

- The Importance of Database Migration

- Simplification of Parallel Execution

- Advanced Code Migration and Performance Optimization

- About the special site during DAIS

The Importance of Regular Integration Testing

The session emphasized running the full test suite every morning and reporting any bugs that surface as non-recoverable errors. It also recommended running the tests on every pull request and again nightly as part of the release process. Catching issues this early keeps them from turning into customer-facing problems.

The Power of Pytest Fixtures

The presentation highlighted the pytest framework as the basis for efficient testing. It walked through, in detail, tests that needed 20 different settings per service principal but did not need database integration or external tables.

Debug Mode and Multi-Cloud Testing

The session also covered running tests in debug mode. The speaker outlined how environment variables are loaded from a special file dedicated to debug mode. Examples of UCX running on both Azure and AWS workspaces showed how this makes it possible to test different settings in different environments and to adapt the setup to each developer's situation.
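The debug-mode loading described above could be sketched roughly as follows. The file layout (one JSON section per environment), the function name `load_debug_env`, and the variable names are assumptions made for illustration, not the format UCX actually uses.

```python
import json
import os
from pathlib import Path


def load_debug_env(path, env_name, environ=None):
    """Copy the variables of one named environment into an environment mapping.

    Hypothetical file layout: {"azure": {"VAR": "value"}, "aws": {...}}.
    Variables that are already set are kept, so CI-provided values win.
    """
    environ = os.environ if environ is None else environ
    file = Path(path)
    if not file.exists():
        # No debug file: behave as a normal (non-debug) run.
        return environ
    sections = json.loads(file.read_text())
    for key, value in sections.get(env_name, {}).items():
        environ.setdefault(key, str(value))
    return environ
```

Switching `env_name` between, say, `"azure"` and `"aws"` would then let the same test run against a different workspace without editing the test itself.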

Static and Dynamic Pytest Fixtures

The session clarified the distinction between two types of pytest fixtures, static and dynamic. Static fixtures are passed into integration tests as environment variables, while dynamic fixtures are created by pytest during the test run.
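As a rough illustration of the two kinds, the helpers below show the logic each would wrap. The names `static_value` and `dynamic_name` are hypothetical; in a real suite each would sit inside a function decorated with `@pytest.fixture`, and a missing variable would more likely skip the test than raise.

```python
import os
import uuid


def static_value(name, environ=None):
    """Static: the value exists before the run and arrives via an environment variable."""
    environ = os.environ if environ is None else environ
    if name not in environ:
        raise LookupError(f"environment variable {name} is not set")
    return environ[name]


def dynamic_name(prefix="ucx_test"):
    """Dynamic: a fresh, unique name generated for each test that asks for one."""
    return f"{prefix}_{uuid.uuid4().hex[:8]}"
```

A static value suits things that are expensive or impossible to create per test, such as a workspace URL, while a dynamic name suits throwaway objects like temporary schemas.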

Maintaining Test Consistency

Another segment stressed that tests stay consistent only if they clean up after themselves, even when they fail because of unrecoverable errors. The speaker mentioned the need for systems that garbage-collect data frames and other leftover elements as a way to achieve this.
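One way to guarantee clean-up even when a test fails is a factory that remembers what it created and removes it in a `finally` block. The sketch below uses `contextlib` from the standard library as a stand-in; `resource_factory`, `create`, and `remove` are hypothetical names, and in pytest the same shape is usually written as a fixture that yields.

```python
from contextlib import contextmanager


@contextmanager
def resource_factory(create, remove):
    """Yield a `make` function and remove everything it created on exit."""
    created = []

    def make(*args, **kwargs):
        resource = create(*args, **kwargs)
        created.append(resource)
        return resource

    try:
        yield make
    finally:
        # Runs whether the body succeeded or raised; newest resources go first.
        for resource in reversed(created):
            try:
                remove(resource)
            except Exception as err:
                # A failed removal should not hide the original test failure.
                print(f"ignoring clean-up error for {resource!r}: {err}")
```

Removing in reverse order matters when later resources depend on earlier ones, for example a table inside a schema.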

Taken together, these practices show how a solid understanding of testing and a multi-cloud strategy lead to more robust and efficient Python applications on Databricks. They underpin the quality of large Python projects such as UCX and show how the ideas apply in real-world scenarios.

Configuration Management Using YAML or JSON Files

Python applications on Databricks often store their configuration in YAML or JSON files. Changing the format later, for example by renaming or deleting a field, can be challenging. Terraform addresses this with a technique called "State Upgraders". The method lets a configuration file format evolve by converting one dictionary into another and then saving the result back to the file.

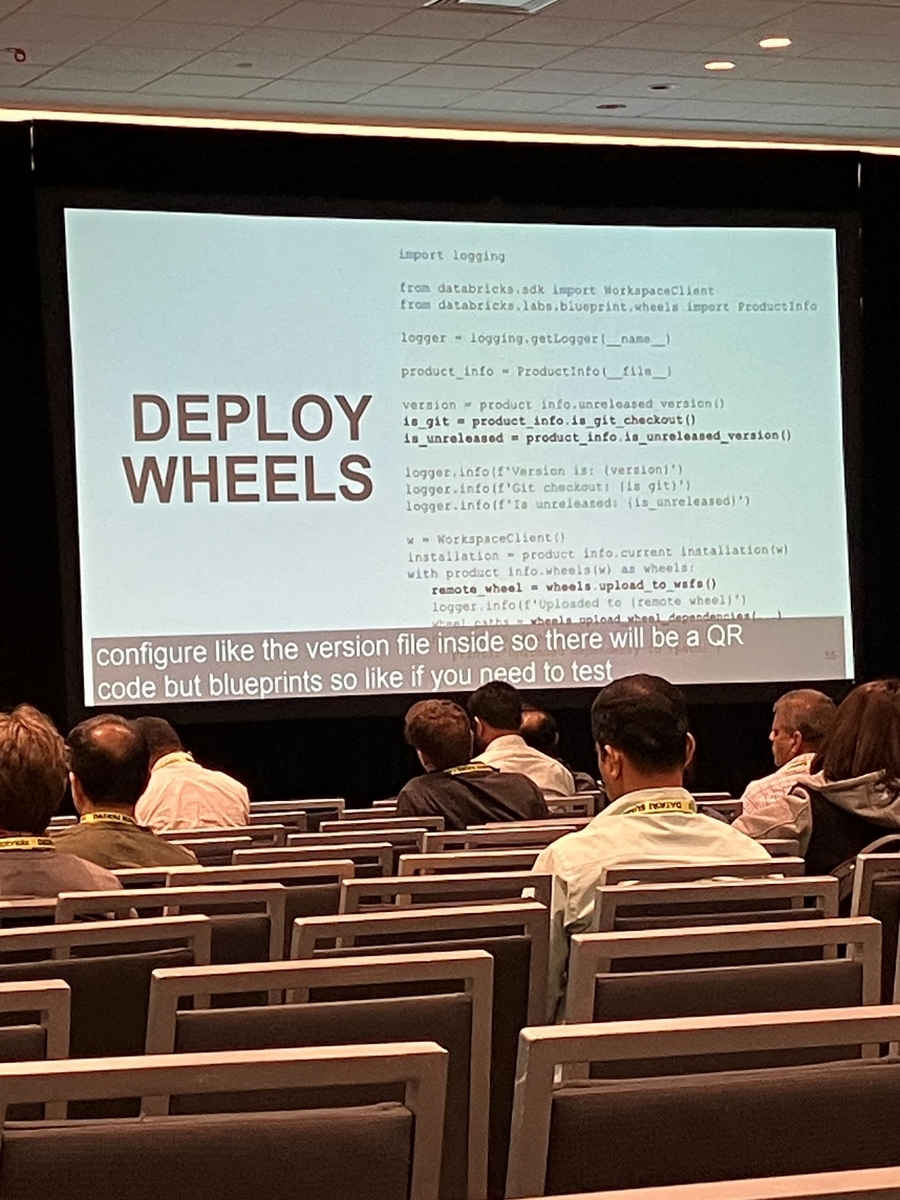
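A minimal sketch of the state-upgrader idea follows, assuming a `version` field in the configuration and two made-up format changes. The field names and the `upgrade` helper are illustrative only and are not taken from Terraform or UCX.

```python
def _v1_to_v2(cfg):
    # Hypothetical change: "warehouse" was renamed to "warehouse_id".
    cfg = dict(cfg)
    cfg["warehouse_id"] = cfg.pop("warehouse", None)
    cfg["version"] = 2
    return cfg


def _v2_to_v3(cfg):
    # Hypothetical change: "legacy_flag" was removed.
    cfg = dict(cfg)
    cfg.pop("legacy_flag", None)
    cfg["version"] = 3
    return cfg


# Each entry upgrades from the keyed version to the next one.
UPGRADERS = {1: _v1_to_v2, 2: _v2_to_v3}
CURRENT_VERSION = 3


def upgrade(cfg):
    """Apply upgraders one step at a time until the config is current."""
    version = cfg.get("version", 1)  # files written before versioning count as v1
    while version < CURRENT_VERSION:
        cfg = UPGRADERS[version](cfg)
        version = cfg["version"]
    return cfg
```

Because every step only knows about two adjacent versions, a file of any age can be brought up to date by chaining the steps, and the upgraded dictionary can then be written back to YAML or JSON.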

The Importance of Database Migration

Consistency and precision are critical for database migrations in Databricks, such as adding columns or changing settings. Automating these tasks is essential for a seamless rollout to production environments. The upgrade framework in Databricks Labs' Blueprint is particularly useful here: it lets you define scripts that run only once for each deployed version of your application.
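The run-once-per-version behaviour can be illustrated with a small sketch. This is not Blueprint's actual API; `apply_upgrades`, the `scripts` mapping, and the `applied` set are assumptions chosen to show the bookkeeping involved.

```python
def _parse(version):
    """Turn '0.10.0' into (0, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def apply_upgrades(scripts, applied, target_version):
    """Run each pending script once, in version order, up to target_version.

    scripts: mapping of version string to a zero-argument callable.
    applied: set of versions that already ran; it is updated in place.
    Returns the list of versions that ran during this call.
    """
    ran = []
    for version in sorted(scripts, key=_parse):
        if version in applied or _parse(version) > _parse(target_version):
            continue
        scripts[version]()
        applied.add(version)
        ran.append(version)
    return ran
```

In practice the `applied` set would have to be persisted somewhere, for instance alongside the installed configuration, so that a second deployment of the same version does nothing.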

Simplification of Parallel Execution

Running the tasks above efficiently often requires parallel processing or multi-threading, which tends to be complex and error-prone. To make this easier, Databricks Labs offers a framework called "Blueprint". It simplifies parallel processing, smooths the workflow, and can reduce execution time.
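To show the kind of boilerplate such a helper hides, here is a sketch built on the standard library's `concurrent.futures`. It is a stand-in, not Blueprint's own interface; the function name `gather` and its return shape are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def gather(tasks, max_workers=4):
    """Run zero-argument callables in threads; return (results, errors).

    Failures are collected instead of aborting the batch, so one bad task
    does not hide the outcome of the others. Results arrive in completion
    order, not submission order.
    """
    results, errors = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(task) for task in tasks]
        for future in as_completed(futures):
            try:
                results.append(future.result())
            except Exception as err:
                errors.append(err)
    return results, errors
```

Callers can then decide whether any entry in `errors` should fail the whole operation or merely be logged.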

The next section delves into more advanced topics of application development in Databricks using the Python language, with a specific focus on performance tuning and debugging.

Advanced Code Migration and Performance Optimization

UCX is quick to flag problems and propose solutions. For instance, when it detects that the Databricks File System (DBFS) is being used directly, it gives a specific message such as, "DBFS is workspace-specific and, because it lacks ACLs, is not suitable for use with Unity Catalog. It is recommended to stop using it."

It also checks whether code still uses tables that have already been migrated to Unity Catalog and recommends stopping that use. UCX is designed to hold hundreds of such checks so that issues are identified and resolved promptly, which speeds up migrations.
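Checks of this kind could be sketched as a tiny linter over string literals in Python source. The path prefixes `dbfs:/` and `/dbfs/`, the `MIGRATED` mapping, and the message wording are all assumptions for illustration; UCX's real checks are more thorough and are not reproduced here.

```python
import ast

# Hypothetical mapping of already migrated tables to their Unity Catalog names.
MIGRATED = {"hive_metastore.sales.orders": "main.sales.orders"}


def lint(source):
    """Return sorted (line, message) findings for string literals in source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not (isinstance(node, ast.Constant) and isinstance(node.value, str)):
            continue
        text = node.value
        if text.startswith(("dbfs:/", "/dbfs/")):
            findings.append((node.lineno, f"direct DBFS path: {text}"))
        for old, new in MIGRATED.items():
            if old in text:
                findings.append((node.lineno, f"table {old} was migrated; use {new}"))
    return sorted(findings)
```

Because the source is only parsed and never executed, the check can run over notebooks and libraries without a cluster or a Spark session.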

To replace references to already migrated tables, UCX aims to automate the code migration itself. This feature is expected to arrive once work on the linter has reduced the amount of error output. The current UCX version, only three weeks after its release, still contains several bugs.

All participants are therefore encouraged to use UCX to check how compatible their notebooks and Python libraries are with Unity Catalog. Doing so paves the way for a smoother and quicker code migration, reduces the manual effort involved, and makes Python application development on Databricks more resilient.

Conclusion

UCX has significantly improved Python application development on Databricks and streamlined the code migration process. In particular, its checks for direct DBFS usage and for already migrated tables speed up problem resolution when issues arise. As development of UCX continues, further gains in efficiency and productivity are expected.

About the special site during DAIS

This year, we have prepared a special site to report on session contents and the atmosphere on site at DAIS. We plan to update the blog every day during DAIS, so please take a look.